
How to Use Your Architectural Models to Develop Design Concepts with Artificial Intelligence (AI)

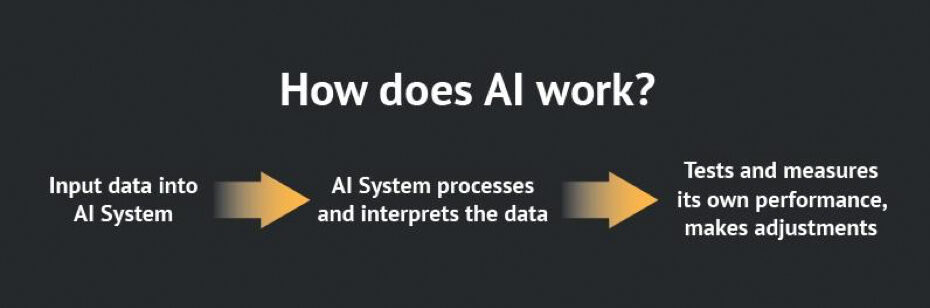

Welcome to this lesson! We're going to guide you through using your own architectural models to develop design concepts with the power of artificial intelligence. By the end of this lesson, you'll know how to combine your architectural models with AI technology to quickly generate design concepts.

Tools we're using for this tutorial:

3D Model Render (we're using our own)

Stable Diffusion, a text-to-image app

ControlNet, an extension for Stable Diffusion that helps you control the composition of your AI-generated image

Step 1: Install Stable Diffusion/Run Online Version

To begin, you'll need to either install Stable Diffusion locally on your computer (for Windows users) or use an online version of Stable Diffusion (for Mac users). Once it's installed or running online, you'll gain access to a world of possibilities: you'll be able to run ControlNet, use your own models, and enjoy many more features.

How to Install Stable Diffusion/Run the Online Version

Windows Users: A guide to installing can be found here https://youtu.be/Po-ykkCLE6M.

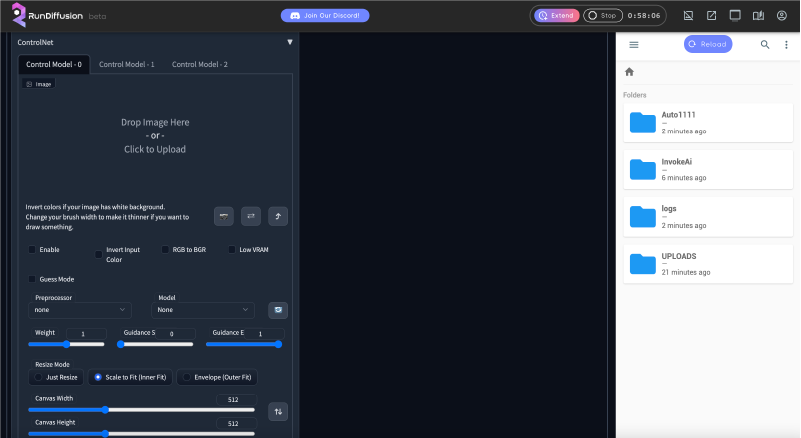

Mac Users: You can run Stable Diffusion through RunDiffusion, https://app.rundiffusion.com/. It charges an hourly rate that varies depending on your needs, but it's very inexpensive and runs on a timer that you can start and stop at any time. RunDiffusion runs on cloud-based servers and will give you the same results as the downloadable version.
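Because the timer can be paused between sessions, a rough budget is simply total running time multiplied by the hourly rate. The sketch below assumes billing is proportional to running minutes and uses a made-up rate of $0.50/hour; neither reflects RunDiffusion's actual pricing, which varies with your needs. The function name is hypothetical.

```python
# Hypothetical hourly rate in dollars; check the provider's current pricing.
HOURLY_RATE = 0.50


def session_cost(minutes_per_session, hourly_rate=HOURLY_RATE):
    """Estimate the cost of several start/stop sessions on an hourly timer.

    Assumes billing is proportional to the minutes the timer is running;
    the real provider may round or bill differently.
    """
    total_minutes = sum(minutes_per_session)
    return round(total_minutes / 60 * hourly_rate, 2)


# Three sessions of 30, 45 and 15 minutes with the timer paused in between.
print(session_cost([30, 45, 15]))
```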

Quick Tips for Improving Your Renderings

Start with a high-quality sketch. AI thrives on the quality of the input it receives, so it's important to make your sketch easy to interpret. One way to do this is to use varied line weights, which help the AI figure out the depth and background of your sketch more accurately.

Additionally, we recommend basing your initial renders on 3D models that are as detailed as possible. The best solution is to take architectural models from professional 3D model libraries, such as 3DModels.org, CGTrader, Sketchfab, or TurboSquid.

Use Proper Settings. In the upper left corner of the interface, there is a drop-down menu called "Stable Diffusion Checkpoint." This is where you select the model that suits your needs and creative process. Based on a recommendation, we chose the model "realisticVision_V20."
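If you later script your renders instead of clicking through the interface, the same checkpoint choice can be made per request. This sketch assumes the AUTOMATIC1111 Stable Diffusion web UI (which the interface described here appears to be), started with its API enabled. `override_settings` and `sd_model_checkpoint` are fields of that API; the helper name is hypothetical, and the checkpoint string must match what your drop-down lists, which may include a file extension or hash.

```python
import json


def checkpoint_override(checkpoint_name):
    """Return the part of a txt2img request body that selects a checkpoint.

    Assumes the AUTOMATIC1111 web UI API, where override_settings applies
    the given settings to this request (restored afterwards by default).
    """
    return {"override_settings": {"sd_model_checkpoint": checkpoint_name}}


# Checkpoint name from this tutorial; your installed file name may differ.
body = checkpoint_override("realisticVision_V20")
print(json.dumps(body))
```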

Adjust the CFG Scale. CFG is short for Classifier-Free Guidance, a parameter that controls how closely the image generation process follows your text prompt. Super important, right? In theory, the higher the CFG value, the more strictly the AI will follow your prompt, and any effect on generation time is minimal.
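For the curious, the idea behind this setting is simple. At each denoising step the model makes two noise predictions, one conditioned on your prompt and one without it (in tools that support a negative prompt, it usually takes the place of the empty prompt), and the CFG scale sets how far the result is pushed from the second toward the first: guided = uncond + scale × (cond − uncond). The sketch below shows only that combining step on toy numbers; real predictions are large tensors, and the function name is ours, not part of any library.

```python
def classifier_free_guidance(uncond, cond, scale):
    """Combine two noise predictions with classifier-free guidance.

    uncond: prediction without your prompt (empty or negative prompt)
    cond:   prediction with your text prompt
    scale:  the CFG scale; 1.0 reproduces the prompt-only prediction,
            larger values push further in the direction the prompt suggests.
    """
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Toy values: only the first component differs between the two predictions,
# so only that component is amplified by the scale.
print(classifier_free_guidance([0.0, 1.0], [1.0, 1.0], 7.0))
```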

Step 2: Using ControlNet to Upload Your Architectural Model Image

Once you're up and running in the Stable Diffusion app, navigate to the ControlNet tab located in the bottom left of the interface. Upload your sketch image here, then check the "Enable" checkbox so the image is recognized and used along with your prompt. Don't skip this step: without it, Stable Diffusion won't incorporate your uploaded sketch.

Next, find the drop-down menu right below the "Enable" checkbox, called Preprocessor. Click on it and select "Scribble." We chose this option because it is designed for simple black-and-white line drawings and sketches, like our model! Then, in the adjacent Model drop-down menu, choose "ScribbleV10" to match the Scribble preprocessor.

Our starting 3D model render.
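The ControlNet settings from this step (Enable, Preprocessor, Model, and the uploaded image) can also be expressed as a request body. This is a sketch under assumptions: the field names follow the sd-webui-controlnet extension's API for the AUTOMATIC1111 web UI as we understand it, so check them against your installed version; the helper names and the placeholder image bytes are hypothetical; and the exact preprocessor and model strings must match what your drop-downs list (model names usually include a file name and hash rather than "ScribbleV10").

```python
import base64


def scribble_unit(image_bytes, model_name="ScribbleV10"):
    """Build one ControlNet unit for a scribble-style line drawing.

    Field names are an assumption based on the sd-webui-controlnet API.
    """
    return {
        "enabled": True,  # the "Enable" checkbox
        "input_image": base64.b64encode(image_bytes).decode("ascii"),
        "module": "scribble",  # the Preprocessor drop-down
        "model": model_name,  # the Model drop-down
    }


def with_controlnet(payload, unit):
    """Return a copy of a txt2img request body with a ControlNet unit attached."""
    payload = dict(payload)
    payload["alwayson_scripts"] = {"controlnet": {"args": [unit]}}
    return payload


# Placeholder bytes stand in for your render, e.g. the contents of a
# hypothetical "render.png" read with open("render.png", "rb").read().
unit = scribble_unit(b"fake-png-bytes")
request = with_controlnet({"prompt": "realistic house design"}, unit)
```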

Step 3: Bring Your Models to Life

Now it's time to write your text prompt and begin bringing your models to life. You can enter up to 70 words in the prompt, so provide a clear, detailed description of your desired outcome.
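One way to keep prompts descriptive yet within the 70-word guideline is to assemble them from short phrases and count words before pasting. Stable Diffusion's text encoder actually measures prompts in tokens rather than words, so treat the count as a rough guide. Both helper functions are hypothetical.

```python
MAX_WORDS = 70  # the prompt limit stated in this tutorial


def build_prompt(descriptors):
    """Join short descriptive phrases into a comma-separated prompt."""
    return ", ".join(d.strip() for d in descriptors if d.strip())


def check_length(prompt, max_words=MAX_WORDS):
    """Return the word count, raising ValueError if it exceeds the limit."""
    count = len(prompt.split())
    if count > max_words:
        raise ValueError(f"prompt has {count} words; limit is {max_words}")
    return count


prompt = build_prompt(["realistic house design", "arched windows", "field stone", "white trim"])
print(prompt)
print(check_length(prompt))
```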

Negative Prompt Text Input Area

Once your image is generated, you may want to make edits. Look for another input box, right below your initial prompt, called "Negative prompt." It lets you remove specific elements from your generated render as needed. For example, you can enter things like "no other buildings in the area" or, in our particular case, "only 1 front door." This gives you the power to fine-tune your output.
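If you script your renders, the prompt and negative prompt travel together in one request. This sketch assumes the AUTOMATIC1111 web UI API field names (`prompt`, `negative_prompt`, `cfg_scale`, `steps`); the helper name is hypothetical, and the default values are illustrative rather than settings recommended in this tutorial.

```python
def txt2img_payload(prompt, negative_prompt="", cfg_scale=7.0, steps=20):
    """Assemble a txt2img request body with a prompt and a negative prompt.

    Field names assume the AUTOMATIC1111 web UI API; defaults are examples.
    """
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,  # the "Negative prompt" box
        "cfg_scale": cfg_scale,
        "steps": steps,
    }


payload = txt2img_payload(
    "realistic house design, arched windows, field stone, white trim",
    negative_prompt="only 1 front door",
)
```

With the web UI launched with its `--api` flag, a body like this would be sent as JSON in a POST request to the `/sdapi/v1/txt2img` endpoint.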

Our Results:

Prompt #1:

Text input: realistic house design, arched windows, field stone, white trim, darker realistic night sky behind building, concrete street in the foreground of the image, grass and driveway between the street and the house, lights illuminating from inside the house, same perspective

Prompt #2:

Text input: realistic house design, arched windows, field stone, turquoise color house siding, white trim, daytime sunny sky behind building, concrete street in the foreground of the image, grass and driveway between the street and the house, lights illuminating from inside the house, same perspective, palm trees
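The two prompts share most of their phrases, which is what makes quick variations possible. As a sketch, a hypothetical helper can rebuild both from a shared base plus a few swaps (siding color, sky, and extra elements); it reproduces only the exact text above and is not part of any tool.

```python
def variant(siding=None, sky="darker realistic night sky", extras=()):
    """Rebuild the tutorial's prompts from shared phrases plus a few swaps."""
    parts = ["realistic house design", "arched windows", "field stone"]
    if siding:
        parts.append(siding)
    parts += [
        "white trim",
        f"{sky} behind building",
        "concrete street in the foreground of the image",
        "grass and driveway between the street and the house",
        "lights illuminating from inside the house",
        "same perspective",
        *extras,
    ]
    return ", ".join(parts)


night = variant()  # Prompt #1
day = variant(
    siding="turquoise color house siding",
    sky="daytime sunny sky",
    extras=("palm trees",),
)  # Prompt #2
```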

In Conclusion

AI apps are only as smart as their users make them. Remember, the more descriptive your text prompts, the more accurate your outputs will be. Experiment a bit; a lot of this is trial and error. Once you get results, keep adjusting your prompts as needed to get closer to what you want.

This resource is an excellent tool for generating design concepts quickly and impressively! In a matter of minutes, we had stunning design concepts and could quickly create many different versions. It's a great way to streamline our design process and spark inspiration.

FAQs

Why download Stable Diffusion locally onto your computer?

It expands what you can create. You can get around word restrictions, use your own models, have it reproduce an exact pose, set it up with Deforum for video, and use many other features.

Is there a cost for running Stable Diffusion online?

Yes, there is an hourly cost that varies with your needs. There is a $5 minimum for adding funds.

Where can I find 3D models?

The post How to Use Your Architectural Models to Develop Design Concepts with Artificial Intelligence (AI) appeared first on Triplet 3D.