How to Implement a Custom Training Solution Using Basic (Unmanaged) Cloud Service APIs

Photo by Aditya Chinchure on Unsplash

In previous posts (e.g., here) we have expanded on the benefits of developing AI models in the cloud. Machine Learning projects, especially large ones, typically require access to specialized machinery (e.g., training accelerators), the ability to scale at will, an appropriate infrastructure for maintaining large amounts of data, and tools for managing large-scale experimentation. Cloud service providers such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure offer a great number of services that are targeted at AI development, ranging from low-level infrastructure (e.g., GPUs and virtually infinite object-storage) to highly automated tooling for creating custom ML models (e.g., AWS AutoML). Managed training services (such as Amazon SageMaker, Google Vertex AI, and Microsoft Azure ML), in particular, have made training in the cloud especially easy and increased accessibility to prospective ML engineers. To use a managed ML service, all you need to do is specify your desired instance type, choose an ML framework and version, and point to your training script. The service will then automatically start up the chosen instances with the requested environment, run the script to train the AI model, save the resultant artifacts to a persistent storage location, and tear everything down upon completion.

While a managed training service might be the ideal solution for many ML developers, as we will see, there are some occasions that warrant running directly on “unmanaged” machine instances and training your models in an unmediated fashion. In these situations, i.e., in the absence of an official management layer, it may be desirable to include a custom solution for controlling and monitoring your training experiments. In this post, we will propose a few steps for building a very simple “poor man’s” solution for managing training experiments using the APIs of low-level unmanaged cloud services.

We will begin by noting some of the motivations for training on unmanaged machines rather than via managed services. Next, we will identify some of the basic training management features that we desire. Finally, we will demonstrate one way to implement a simple management system using GCP's APIs for creating VM instances.

Although we will demonstrate our solution on GCP, similar solutions can be developed just as easily on alternative cloud platforms. Please do not interpret our choice of GCP or any other tool, framework, or service we should mention as an endorsement of its use. There are multiple options available for cloud-based training, each with their own advantages and disadvantages. The best choice for you will greatly depend on the details of your project. Please be sure to reassess the contents of this post against the most up-to-date versions of the APIs and documentation at the time that you read this.

Many thanks to my colleague Yitzhak Levi for his contributions to this post.

Motivation: Limitations of Managed Training Services

High-level solutions will typically prioritize ease-of-use and increased accessibility at the cost of reduced control over the underlying flow. Cloud-based managed training services are no different. Along with the convenience (as described above), comes a certain loss of control over the details of the training startup and execution. Here we will mention a few examples which we hope are representative of the types of limitations you might encounter.

1. Limitations on choice of machine type

The variety of machine types offered in managed training services does not always cover all of the machine types supported by the cloud service provider. For example, Amazon SageMaker does not (as of the time of this writing) support training on instance types from their DL1 and Inf2 families. For a variety of reasons (e.g. cost savings) you might need or want to train on these instance types. In such cases you will have no choice but to pass on the luxuries of training with Amazon SageMaker.

2. Limited control over the underlying machine image

Managed training workloads typically run inside a dedicated Docker container. You can rely on the service to choose the most appropriate container, choose a specific container from a list of predefined containers built by the service provider (e.g. AWS Deep Learning Containers), or define your own Docker image customized for your specific needs (e.g. see here). But, while you have quite a bit of control over the Docker image, you have no control over the underlying machine image. In most cases this will not be an issue, as Docker is purposely designed to reduce dependence on the host system. However, there are situations, particularly when dealing with specialized HW (as in the case of training), in which we are dependent on specific driver installations in the host image.

3. Limitations on control over multi-node placement

It is common to train large models on multiple machine instances in order to increase the speed of training. In such scenarios, the networking capacity and latency between the machines can have a critical impact on the speed (and cost) of training, due to the continuous exchange of data between them. Ideally, we would like the instances to be co-located in the same data center. Unfortunately (as of the time of this writing), managed services, such as Amazon SageMaker, limit your control over device placement. As a result, you might end up with machines in two or more availability zones, which could negatively impact your training time.

4. User-privilege limitations

Occasionally, training workloads may require root-level access to the system host. For example, AWS recently announced Mountpoint for Amazon S3, a new FUSE-based solution for high throughput access to data storage and a potential method for optimizing the flow of data into your training loop. Unfortunately, this solution can be used in a Docker environment only if your container is run with the --cap-add SYS_ADMIN flag, effectively preventing its use in a managed service setting.

5. Limitations on Docker start-up settings

Some training workloads require the ability to configure particular docker run settings. For example, if your workload stores training data in shared memory (e.g., in /dev/shm) and your data samples are especially large (e.g., high resolution 3D point clouds) you may need to increase the amount of shared memory allotted to the Docker container. While Docker enables this via the --shm-size flag, your managed service might block your ability to control this.

6. Limited access to the training environment

One of the side effects of managed training is the reduced accessibility to the training environment. Sometimes you require the ability to connect directly to your training environment, e.g. for the purposes of debugging failures. When running a managed training job, you essentially relinquish all control to the service including your ability to access your machines at will. Note that some solutions support reduced level access to managed environments assuming appropriate configuration of the training job (e.g. see here).

7. Lack of notification upon Spot Instance termination

Spot instances are unused machines that CSPs often offer at discounted prices. The tradeoff is that these machines can be abruptly taken away from you. When using an unmanaged Spot Instance that is interrupted, you get a termination notice that allows you a bit of time to stop your applications gracefully. If you are running a training workload you can use this advance notification to capture the latest state of your model and copy it to persistent storage so that you can use it to resume later on.

One of the compelling features of managed training services is that they manage the Spot life-cycle for you, i.e., you can choose to train on lower cost Spot Instances and rely on the managed service to automatically resume interrupted jobs when possible. However, when using a Spot Instance via a managed service such as Amazon SageMaker, you do not get a termination notice. Consequently, when you resume your training job it will be from the most recent model state that you captured, not the state of the model at the time of preemption. Depending on how often you capture intermediate checkpoints, this difference can have an impact on your training costs.
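To make the cost impact concrete, here is a rough back-of-the-envelope sketch. It rests on an assumption of ours (not a measured result): a preemption is equally likely at any point between two checkpoints, so on average half a checkpoint interval of work is lost each time. The function name and the numbers in the example are purely illustrative.

```python
def expected_lost_cost(num_preemptions: int,
                       checkpoint_interval_minutes: float,
                       hourly_price: float) -> float:
    """Estimate the cost of recomputed work caused by Spot preemptions.

    Assumes preemptions land uniformly between checkpoints, so the
    average loss per preemption is half the checkpoint interval.
    """
    lost_hours_per_preemption = (checkpoint_interval_minutes / 60.0) / 2.0
    return num_preemptions * lost_hours_per_preemption * hourly_price


# Hypothetical numbers: 4 preemptions, hourly checkpoints, 8 dollars per hour
print(expected_lost_cost(4, 60, 8.0))
```

Under this simple model, halving the checkpoint interval halves the expected loss, which should be weighed against the overhead of saving checkpoints more often.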

Cost Considerations

Another property of managed training services that is worth noting is the additional cost that is often associated with their use. AI development can be an expensive undertaking and you might choose to reduce costs by forgoing the convenience of a managed training service in exchange for a simpler custom solution.

The Kubernetes Alternative

Container orchestration systems are sometimes put forth as an alternative to managed training services. The most popular of these (as of the time of this writing) is Kubernetes. With its high level of auto-scalability and its support for breaking applications into microservices, Kubernetes has become the platform of choice for many modern-application developers. This is particularly true for applications that include complex flows with multiple interdependent components, sometimes referred to as Directed Acyclic Graph (DAG) workflows. Some end-to-end ML development pipelines can be viewed as a DAG workflow (e.g., beginning with data preparation and processing and ending with model deployment). And in fact, Kubernetes-based solutions are often applied to ML pipelines. However, when it comes to the training phase alone (as in the context of this post), where we typically know the precise number and type of instances, it can be argued that Kubernetes provides little added value. The primary disadvantage of Kubernetes is that it usually requires reliance on a dedicated infrastructure team for its ongoing support and maintenance.

Training Management Minimal Requirements

In the absence of a CSP training management service, let’s define some of the basic management features that we would like.

- Automatic start-up: We would like the training script to run automatically as soon as the instances start up.
- Automatic instance termination: We would like for the machine instances to be automatically terminated as soon as the training script completes so that we do not pay for time when the machines are not in use.
- Support for multi-instance training: We require the ability to start up a cluster of co-located instances for multi-node training.
- Persistent logs: We would like for training log output to be written to persistent storage so that it can be accessed at will even after the training machines are terminated.
- Checkpoint capturing: We would like for training artifacts, including periodic checkpoints, to be saved to persistent storage.
- Summary of training jobs: We would like a method for reviewing (and comparing) training experiments.
- Restart on spot termination (advanced feature): We would like the ability to take advantage of discounted spot instance capacity to reduce training costs without jeopardizing continuity of development.

In the next section we will demonstrate how these can be implemented in GCP.

Poor Man’s Managed Training on GCP

In this section we will demonstrate a basic solution for training management on GCP using Google’s gcloud command-line utility (based on Google Cloud SDK version 424.0.0) to create VM instances. We will begin with a simple VM instance creation command and gradually supplement it with additional components that will incorporate our desired management features. Note that the gcloud compute-instances-create command includes a long list of optional flags that control many elements of the instance creation. For the purposes of our demonstration, we will focus only on the controls that are relevant to our solution. We will assume: 1) that your environment has been set up to use gcloud to connect to GCP, 2) that the default network has been appropriately configured, and 3) the existence of a managed service account with access permissions to the GCP resources we will use.

1. Create a VM Instance

The following command will start up a g2-standard-48 VM instance (containing four NVIDIA L4 GPUs) with the public M112 VM image. Alternatively, you could choose a custom image.

gcloud compute instances create test-vm \
  --zone=us-east1-d \
  --machine-type=g2-standard-48 \
  --image-family=common-cu121-debian-11-py310 \
  --image-project=deeplearning-platform-release \
  --service-account=my-account@my-project.iam.gserviceaccount.com \
  --maintenance-policy=TERMINATE

2. Auto-start Training

As soon as the machine instance is up and running, we’d like to automatically start the training workload. In the example below we will demonstrate this by passing a startup script to the gcloud compute-instances-create command. Our startup script will perform a few basic environment setup steps and then run the training job. We start by adjusting the PATH environment variable to point to our conda environment, then download the tarball containing our source code from Google Storage, unpack it, install the project dependencies, and finally run our training script. The demonstration assumes that the tarball has already been created and uploaded to the cloud and that it contains two files: a requirements file (requirements.txt) and a stand-alone training script (train.py). In practice, the precise contents of the startup script will depend on the project.

gcloud compute instances create test-vm \
  --zone=us-east1-d \
  --machine-type=g2-standard-48 \
  --image-family=common-cu121-debian-11-py310 \
  --image-project=deeplearning-platform-release \
  --service-account=my-account@my-project.iam.gserviceaccount.com \
  --maintenance-policy=TERMINATE \
  --metadata=startup-script='#! /bin/bash
    # the script is single-quoted, so its lines need no trailing backslashes
    export PATH="/opt/conda/bin:$PATH"
    gsutil cp gs://my-bucket/test-vm/my-code.tar .
    tar -xvf my-code.tar
    python3 -m pip install -r requirements.txt
    python3 train.py'
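The startup script assumes that the code tarball already exists in Google Storage. As a minimal sketch of that preparation step, the helper below packs the two files into an uncompressed tarball (matching the tar -xvf call above) and uploads it with gsutil. The function names are hypothetical, and the bucket path is simply the one used throughout this post.

```python
import subprocess
import tarfile
from pathlib import Path


def package_code(src_dir: str, tar_path: str,
                 files=("requirements.txt", "train.py")) -> str:
    """Pack the given files from src_dir into an uncompressed tarball."""
    src = Path(src_dir)
    with tarfile.open(tar_path, "w") as tar:
        for name in files:
            # arcname keeps the files at the top level of the archive,
            # which is where the startup script expects to find them
            tar.add(str(src / name), arcname=name)
    return tar_path


def upload_code(tar_path: str, destination: str) -> None:
    """Copy the tarball to Google Storage (requires gsutil on the PATH)."""
    subprocess.run(["gsutil", "cp", tar_path, destination], check=True)
```

A typical call, run from the project directory before creating the VM, might be package_code(".", "my-code.tar") followed by upload_code("my-code.tar", "gs://my-bucket/test-vm/my-code.tar").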

3. Self-destruct on Completion

One of the compelling features of managed training is that you pay only for what you need. More specifically, as soon as your training job is completed, the training instances will be automatically torn down. One way to implement this is to append a self-destruct command to the end of our startup script. Note that in order to enable self-destruction the instances need to be created with an appropriate scopes setting. Please see the API documentation for more details.

gcloud compute instances create test-vm \
  --zone=us-east1-d \
  --machine-type=g2-standard-48 \
  --image-family=common-cu121-debian-11-py310 \
  --image-project=deeplearning-platform-release \
  --service-account=my-account@my-project.iam.gserviceaccount.com \
  --maintenance-policy=TERMINATE \
  --scopes=https://www.googleapis.com/auth/cloud-platform \
  --metadata=startup-script='#! /bin/bash
    export PATH="/opt/conda/bin:$PATH"
    gsutil cp gs://my-bucket/test-vm/my-code.tar .
    tar -xvf my-code.tar
    python3 -m pip install -r requirements.txt
    python3 train.py
    # self-destruct: delete this instance once training has finished
    yes | gcloud compute instances delete $(hostname) --zone=us-east1-d'

It is important to keep in mind that despite our intentions, sometimes the instance may not be correctly deleted. This could be the result of a specific error that causes the startup-script to exit early or a stalling process that prevents it from running to completion. We highly recommend introducing a backend mechanism that verifies that unused instances are identified and terminated. One way to do this is to schedule a periodic Cloud Function. Please see our recent post in which we proposed using serverless functions to address this problem when training on Amazon SageMaker.
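As a minimal sketch of the selection logic such a cleanup function might use, the helper below picks out running instances that have existed for longer than an allowed limit. It assumes the input is a list of dictionaries with the name, status, and creationTimestamp fields, as returned by gcloud compute instances list --format=json. The function name and the 24-hour default are hypothetical. Note that creationTimestamp records when the instance was created, so this is only a rough proxy for up-time.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def find_stale_instances(instances: List[Dict[str, str]],
                         now: datetime,
                         max_uptime_hours: float = 24.0) -> List[str]:
    """Return the names of RUNNING instances older than max_uptime_hours."""
    limit = timedelta(hours=max_uptime_hours)
    stale = []
    for inst in instances:
        if inst.get("status") != "RUNNING":
            continue  # stopped instances do not accrue compute charges
        # timestamps look like 2023-06-01T03:00:00.000-07:00
        created = datetime.fromisoformat(inst["creationTimestamp"])
        if now - created > limit:
            stale.append(inst["name"])
    return stale


# Example with made-up instances
example = [
    {"name": "old-vm", "status": "RUNNING",
     "creationTimestamp": "2023-06-01T03:00:00.000-07:00"},
    {"name": "new-vm", "status": "RUNNING",
     "creationTimestamp": "2023-06-02T03:00:00.000-07:00"},
]
print(find_stale_instances(
    example, datetime(2023, 6, 2, 12, 0, tzinfo=timezone.utc)))
```

The returned names could then be passed to a delete call, or simply reported for manual review if automatic deletion feels too aggressive.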

4. Write Application Logs to Persistent Storage

Given that the instances that we train on are terminated upon completion, we need to ensure that system output is written to persistent logging. This is important for monitoring the progress of the job, investigating errors, etc. In GCP this is enabled by the Cloud Logging offering. By default, output logs are collected for each VM instance. These can be accessed with the Logs Explorer by using the instance id associated with the VM. The following is a sample query for accessing the logs of a training job:

resource.type="gce_instance"
resource.labels.instance_id="6019608135494011466"
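If you review many jobs, it can be convenient to generate such queries programmatically. The helper below is a small illustrative sketch (the function name is our own) that builds the query text for a given instance id and optionally adds a severity filter to narrow the results to errors.

```python
from typing import Optional


def build_log_query(instance_id: str,
                    min_severity: Optional[str] = None) -> str:
    """Build a Logs Explorer query for the logs of a single VM instance."""
    lines = [
        'resource.type="gce_instance"',
        f'resource.labels.instance_id="{instance_id}"',
    ]
    if min_severity is not None:
        # e.g. "ERROR" keeps only entries of severity ERROR and above
        lines.append(f"severity>={min_severity}")
    # separate lines in a query are combined with an implicit AND
    return "\n".join(lines)


print(build_log_query("6019608135494011466", min_severity="ERROR"))
```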

To be able to query the logs, we need to make sure to capture and store the instance id of each VM. This has to be done before the instance is terminated. In the code block below we use the compute-instances-describe API to retrieve the instance id of our VM. We write the id to a file and upload it to a dedicated metadata folder in the path associated with our project in Google Storage for future reference:

gcloud compute instances describe test-vm \
  --zone=us-east1-d --format="value(id)" > test-vm-instance-id
gsutil cp test-vm-instance-id gs://my-bucket/test-vm/metadata/

We further populate our metadata folder with the full contents of our compute-instances-create command. This will come in handy later on:

gsutil cp create-instance.sh gs://my-bucket/test-vm/metadata/

5. Save Artifacts in Persistent Storage

Importantly, we must ensure that all of the training job’s artifacts are uploaded to persistent storage before the instance is terminated. The easiest way to do this is to append a command to our startup script that syncs the entire output folder with Google Storage:

gcloud compute instances create test-vm \
  --zone=us-east1-d \
  --machine-type=g2-standard-48 \
  --image-family=common-cu121-debian-11-py310 \
  --image-project=deeplearning-platform-release \
  --service-account=my-account@my-project.iam.gserviceaccount.com \
  --maintenance-policy=TERMINATE \
  --scopes=https://www.googleapis.com/auth/cloud-platform \
  --metadata=startup-script='#! /bin/bash
    export PATH="/opt/conda/bin:$PATH"
    gsutil cp gs://my-bucket/test-vm/my-code.tar .
    tar -xvf my-code.tar
    python3 -m pip install -r requirements.txt
    python3 train.py
    # copy the training artifacts to persistent storage before self-destructing
    gsutil -m rsync -r output gs://my-bucket/test-vm/output
    yes | gcloud compute instances delete $(hostname) --zone=us-east1-d'

The problem with this solution is that it will sync the output only at the end of training. If for some reason our machine crashes due to some error, we might lose all of the intermediate artifacts. A more fault-tolerant solution would involve uploading artifacts to Google Storage (e.g. at fixed training step intervals) throughout the training job.
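One way to do this, sketched below under our own assumptions, is to call a small helper from inside the training loop that triggers a sync every fixed number of steps. The class and function names are hypothetical. The default sync command mirrors the gsutil rsync call used in the startup script, and the sync function is injectable so that the logic can be exercised without access to Google Storage.

```python
import subprocess
from typing import Callable


def gsutil_sync(local_dir: str = "output",
                remote_dir: str = "gs://my-bucket/test-vm/output") -> None:
    """Sync the local output folder to Google Storage (requires gsutil)."""
    subprocess.run(["gsutil", "-m", "rsync", "-r", local_dir, remote_dir],
                   check=True)


class PeriodicSync:
    """Invoke sync_fn once every every_n_steps training steps."""

    def __init__(self, every_n_steps: int,
                 sync_fn: Callable[[], None] = gsutil_sync):
        if every_n_steps <= 0:
            raise ValueError("every_n_steps must be positive")
        self.every_n_steps = every_n_steps
        self.sync_fn = sync_fn

    def maybe_sync(self, step: int) -> bool:
        """Sync if step is a positive multiple of the interval."""
        if step > 0 and step % self.every_n_steps == 0:
            self.sync_fn()
            return True
        return False
```

In a training loop this would amount to creating syncer = PeriodicSync(1000) once and calling syncer.maybe_sync(step) after each step. Since the sync runs synchronously it will briefly stall training, so the interval should be chosen with the size of the artifacts in mind.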

6. Support Multi-node Training

To run a multi-node training job we can use the compute-instances-bulk-create API to create a group of GPU VMs. The command below will create two g2-standard-48 VM instances.

gcloud compute instances bulk create \
  --name-pattern="test-vm-#" \
  --count=2 \
  --region=us-east1 \
  --target-distribution-shape=any_single_zone \
  --machine-type=g2-standard-48 \
  --image-family=common-cu121-debian-11-py310 \
  --image-project=deeplearning-platform-release \
  --service-account=my-account@my-project.iam.gserviceaccount.com \
  --on-host-maintenance=TERMINATE \
  --scopes=https://www.googleapis.com/auth/cloud-platform \
  --metadata=startup-script='#! /bin/bash
    export MASTER_ADDR=test-vm-1
    export MASTER_PORT=7777
    export NODES="test-vm-1 test-vm-2"
    export PATH="/opt/conda/bin:$PATH"
    gsutil cp gs://my-bucket/test-vm/my-code.tar .
    tar -xvf my-code.tar
    python3 -m pip install -r requirements.txt
    python3 train.py
    gsutil -m rsync -r output gs://my-bucket/test-vm/output
    # look up the zone this instance was placed in, then self-destruct
    HN="$(hostname)"
    ZN="$(gcloud compute instances list --filter=name=${HN} --format="value(zone)")"
    yes | gcloud compute instances delete $HN --zone=${ZN}'

# the zone below assumes the instances were placed in us-east1-d;
# replace it with the zone that was actually selected
gcloud compute instances describe test-vm-1 \
  --zone=us-east1-d --format="value(id)" > test-vm-1-instance-id
gsutil cp test-vm-1-instance-id gs://my-bucket/test-vm/metadata/

gcloud compute instances describe test-vm-2 \
  --zone=us-east1-d --format="value(id)" > test-vm-2-instance-id
gsutil cp test-vm-2-instance-id gs://my-bucket/test-vm/metadata/

There are a few important differences from the single VM creation command:

- For bulk creation we specify a region rather than a zone. We chose to force all instances to be in a single zone by setting the target-distribution-shape flag to any_single_zone.
- We have prepended a number of environment variable definitions to our startup script. These will be used to properly configure the training script to run on all nodes.
- To delete a VM instance we need to know the zone in which it was created. Since we do not know this when we run our bulk creation command, we need to extract it programmatically in the startup-script.
- We now capture and store the instance ids of both of the created VMs.

In the script below we demonstrate how to use the environment settings to configure data parallel training in PyTorch.

import os
import socket
import torch
import torch.distributed as dist
import torch.multiprocessing as mp

def mp_fn(local_rank, *args):
    # discover topology settings
    gpus_per_node = torch.cuda.device_count()
    nodes = os.environ['NODES'].split()
    num_nodes = len(nodes)
    world_size = num_nodes * gpus_per_node
    node_rank = nodes.index(socket.gethostname())
    global_rank = (node_rank * gpus_per_node) + local_rank
    print(f'local rank {local_rank} '
          f'global rank {global_rank} '
          f'world size {world_size}')
    # MASTER_ADDR and MASTER_PORT are read from the environment
    dist.init_process_group(backend='nccl',
                            rank=global_rank,
                            world_size=world_size)
    torch.cuda.set_device(local_rank)
    # Add training logic

if __name__ == '__main__':
    mp.spawn(mp_fn,
             args=(),
             nprocs=torch.cuda.device_count())
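To illustrate the rank arithmetic used above, here is a standalone sketch of the same calculation that runs without GPUs. The function name is our own, and the example values correspond to the two g2-standard-48 instances (four GPUs each) created by the bulk command.

```python
from typing import List, Tuple


def compute_ranks(nodes: List[str], hostname: str,
                  gpus_per_node: int, local_rank: int) -> Tuple[int, int]:
    """Return (global_rank, world_size) for one process on one node."""
    world_size = len(nodes) * gpus_per_node
    # the position of this host in the NODES list is its node rank
    node_rank = nodes.index(hostname)
    global_rank = node_rank * gpus_per_node + local_rank
    return global_rank, world_size


nodes = "test-vm-1 test-vm-2".split()
# the process driving the second GPU (local rank 1) of the second node
print(compute_ranks(nodes, "test-vm-2", gpus_per_node=4, local_rank=1))
```

Every process ends up with a unique global rank between 0 and world_size - 1, provided that all nodes see the same NODES ordering and that the hostnames match the instance names.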

7. Experiment Summary Reports

Managed services typically expose an API and/or a dashboard for reviewing the status and outcomes of training jobs. You will likely want to include a similar feature in your custom management solution. There are many ways to do this including using a Google Cloud Database to maintain training job metadata or using third party tools (such as neptune.ai, Comet, or Weights & Biases) to record and compare training results. The function below assumes that the training application return code was uploaded (from the startup script) to our dedicated metadata folder, and simply iterates over the jobs in Google Storage:

import subprocess
from tabulate import tabulate
from google.cloud import storage
from google.cloud.exceptions import NotFound

bucket_name = "my-bucket"

# get job list: each top-level "folder" in the bucket is a job
p = subprocess.run(["gsutil", "ls", f"gs://{bucket_name}/"], capture_output=True)
output = p.stdout.decode("utf-8").split("\n")
# gsutil prints full URIs (e.g. gs://my-bucket/test-vm/); keep only the job name
jobs = [i.rstrip("/").split("/")[-1] for i in output if i.endswith("/")]

storage_client = storage.Client()
bucket = storage_client.get_bucket(bucket_name)

entries = []
for job in jobs:
    blob = bucket.blob(f'{job}/metadata/{job}-instance-id')
    inst_id = blob.download_as_string().decode('utf-8').strip()
    try:
        blob = bucket.blob(f'{job}/metadata/status-code')
        status = blob.download_as_string().decode('utf-8').strip()
        # the file holds the return code as text, so compare with '0'
        status = 'SUCCESS' if status == '0' else 'FAILED'
    except NotFound:
        # no status code was uploaded yet: the job has not finished
        status = 'RUNNING'
    entries.append([job, status, inst_id, f'gs://{bucket_name}/{job}/my-code.tar'])

print(tabulate(entries,
               headers=['Job', 'Status', 'Instance/Log ID', 'Code Location']))

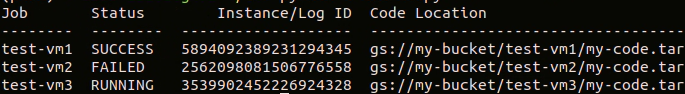

The code above will generate a table with the following format:

Table of Training Experiments (by Author)

Table of Training Experiments (by Author)8. Support Spot Instance Usage

We can easily modify our single-node creation script to use Spot VMs by setting the provisioning-model flag to SPOT and the instance-termination-action flag to DELETE. However, we would like our training management solution to manage the full life-cycle of Spot VM utilization, i.e. to identify Spot preemptions and restart unfinished jobs when possible. There are many ways of supporting this feature. In the example below we define a dedicated Google Storage path, gs://my-bucket/preempted-jobs/, that maintains a list of names of unfinished training jobs, and we define a shutdown script that identifies a Spot preemption and adds the current job name to the list. Our shutdown script is a stripped down version of the script recommended in the documentation. In practice, you may want to include logic for capturing and uploading the latest model state.

#!/bin/bash
MY_PROGRAM="python"

# Find the newest copy of $MY_PROGRAM
PID="$(pgrep -n "$MY_PROGRAM")"
if [[ "$?" -ne 0 ]]; then
  echo "${MY_PROGRAM} not running shutting down immediately."
  exit 0
fi

echo "Termination in progress registering job..."
gsutil cp /dev/null gs://my-bucket/preempted-jobs/$(hostname)
echo "Registration complete shutting down."

We copy the contents of our shutdown script to a shutdown.sh file and add the metadata-from-file flag with the location of the script.

gcloud compute instances create test-vm \
  --zone=us-east1-d \
  --machine-type=g2-standard-48 \
  --image-family=common-cu121-debian-11-py310 \
  --image-project=deeplearning-platform-release \
  --service-account=my-account@my-project.iam.gserviceaccount.com \
  --maintenance-policy=TERMINATE \
  --scopes=https://www.googleapis.com/auth/cloud-platform \
  --provisioning-model=SPOT \
  --instance-termination-action=DELETE \
  --metadata-from-file=shutdown-script=shutdown.sh \
  --metadata=startup-script='#! /bin/bash
    export PATH="/opt/conda/bin:$PATH"
    gsutil cp gs://my-bucket/test-vm/my-code.tar .
    tar -xvf my-code.tar
    python3 -m pip install -r requirements.txt
    python3 train.py
    gsutil -m rsync -r output gs://my-bucket/test-vm/output
    yes | gcloud compute instances delete $(hostname) --zone=us-east1-d'

Working with shutdown scripts in GCP has some caveats that you should make sure to be aware of. In particular, the precise behavior may vary based on the machine image and other components in the environment. In some cases, you might choose to program the shutdown behavior directly into the machine image (e.g., see here).
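As a complement to the shutdown script, the training application itself can check whether its VM has been preempted by querying the instance metadata server, and use that signal to save the latest model state. The sketch below is one possible way to do this. The function names are our own, and the fetch function is injectable so that the logic can be tested off of GCP. How much time remains after the flag turns true is limited, so any checkpoint logic triggered by it should be quick.

```python
import urllib.request
from typing import Callable

# metadata server entry that reports whether this VM has been preempted
PREEMPTED_URL = ("http://metadata.google.internal/computeMetadata/v1/"
                 "instance/preempted")


def fetch_preempted_flag(timeout: float = 2.0) -> str:
    """Query the metadata server; it answers with TRUE or FALSE."""
    req = urllib.request.Request(PREEMPTED_URL,
                                 headers={"Metadata-Flavor": "Google"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def is_preempted(fetch: Callable[[], str] = fetch_preempted_flag) -> bool:
    """Return True only if the metadata server reports a preemption."""
    try:
        return fetch().strip().upper() == "TRUE"
    except OSError:
        # metadata server unreachable (e.g. not running on GCP)
        return False
```

A training loop could call is_preempted() every few steps and, when it returns True, write a checkpoint to persistent storage and exit.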

The final component to the solution requires a Cloud Function that traverses the list of items in gs://my-bucket/preempted-jobs/ and for each one retrieves the associated initialization command (e.g., gs://my-bucket/test-vm/metadata/create-instance.sh) and attempts to rerun it. If it succeeds, it removes the job name from the list.

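import os
import subprocess
from google.cloud import storage

storage_client = storage.Client()
bucket = storage_client.get_bucket('my-bucket')

# each object under preempted-jobs/ is named after an unfinished job
jobs = bucket.list_blobs(prefix='preempted-jobs/')
for job in jobs:
    job_name = job.name.split('/')[-1]
    if not job_name:
        continue  # skip the folder placeholder object, if one exists
    # retrieve the creation command that was stored with the job metadata
    script_path = f'{job_name}/metadata/create-instance.sh'
    blob = bucket.blob(script_path)
    blob.download_to_filename('script.sh')
    os.chmod('script.sh', 0o777)
    # rerun the creation command; a zero return code means the VM was created
    p = subprocess.run(['./script.sh'], capture_output=True)
    if p.returncode == 0:
        job.delete()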

The Cloud Function can be programmed to run periodically and/or we can design a mechanism that triggers it from the shutdown script.

Multi-node Spot usage: When applying Spot utilization to multi-node jobs, we need to address the possibility that only a subset of nodes will be terminated. The easiest way to handle this is to program our application to identify when some of the nodes have become unresponsive, discontinue the training loop, and proceed to shut down.
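A minimal sketch of the detection step is shown below. It assumes that each node periodically reports a heartbeat timestamp to some shared location (the reporting mechanism is out of scope here) and that the timestamps have been collected into a dictionary. The function name and the 120-second default are hypothetical.

```python
from typing import Dict, List


def unresponsive_nodes(last_heartbeat: Dict[str, float],
                       now: float,
                       timeout_seconds: float = 120.0) -> List[str]:
    """Return the nodes whose last heartbeat is older than the timeout."""
    return sorted(node for node, seen in last_heartbeat.items()
                  if now - seen > timeout_seconds)


# Example: test-vm-2 last reported 150 seconds ago
heartbeats = {"test-vm-1": 1000.0, "test-vm-2": 850.0}
print(unresponsive_nodes(heartbeats, now=1000.0))
```

If the returned list is not empty, the surviving nodes can save their state, register the job for restart, and shut down.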

Managed-Training Customization

One of the advantages of building your own managed training solution is your ability to customize it to your specific needs. In the previous section we demonstrated how to design a custom solution for managing the Spot VM life cycle. We can similarly use tools such as Cloud Monitoring Alerts, Cloud Pub/Sub messaging services, and serverless Cloud Functions to tailor solutions for other challenges, such as cleaning up stalled jobs, identifying under-utilized GPU resources, limiting the overall up-time of VMs, and governing developer usage patterns. Please see our recent post in which we demonstrated how to apply serverless functions to some of these challenges in a managed training setting.

Note that the management solution we have defined addresses each of the limitations of managed training that we listed above:

- The gcloud compute-instances-create API exposes all of the VM instance types offered by GCP and allows you to point to the machine image of your choice.
- The gcloud compute-instances-bulk-create API allows us to start up multiple nodes in a way that ensures that all nodes are co-located in the same zone.
- Our solution supports running in a non-containerized environment. If you choose to use containers nonetheless, you can configure them with any setting and any user privilege that you want.
- GCP VMs support SSH access (e.g. via the gcloud compute-ssh command).
- The Spot VM lifecycle support we described supports capturing and acting on preemption notifications.

Having Said All That…

There is no denying the convenience of using managed training services (such as Amazon SageMaker, Google Vertex AI, and Microsoft Azure ML). Not only do they take care of all of the management requirements we listed above, but they typically offer additional features such as hyperparameter optimization, platform-optimized distributed training libraries, specialized development environments, and more. Indeed, there are sometimes very good reasons to develop your own management solution, but it may be a good idea to fully explore all opportunities for using existing solutions before taking that path.

Summary

While the cloud can present an ideal domain for developing AI models, an appropriate training management solution is essential for making its use effective and efficient. Although CSPs offer dedicated managed training services, they don’t always align with our project requirements. In this post, we have shown how a simple management solution can be designed by using some of the advanced controls of the lower level, unmanaged, machine instance creation APIs. Naturally, one size does not fit all; the most ideal design will highly depend on the precise details of your AI project(s). But we hope that our post has given you some ideas with which to start.

A Simple Solution for Managing Cloud-Based ML-Training was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.