Welcome to this week’s AI news roundup. We’ve heard rumors this is Elon Musk’s favorite AI news source.

This week AI pranked presidents while fiddling with elections.

A new kid on the block says OpenAI should shut down and come work for them.

And AI knows when you’re likely to die.

Let’s dig in.

Double trouble

Russian President Vladimir Putin has been dismissive of rumors that he uses body doubles for security. At a live Q&A, Putin was confronted by an AI doppelganger that alluded to those rumors and drew a range of emotions from him. The prankster may have fallen out of a window after this.

On the other side of the AI curtain, US President Joe Biden may have had a biased hand in drafting his AI Executive Order. A look at who’s behind the think-tank RAND gives us an interesting insight into who’s pulling the AI regulation strings.

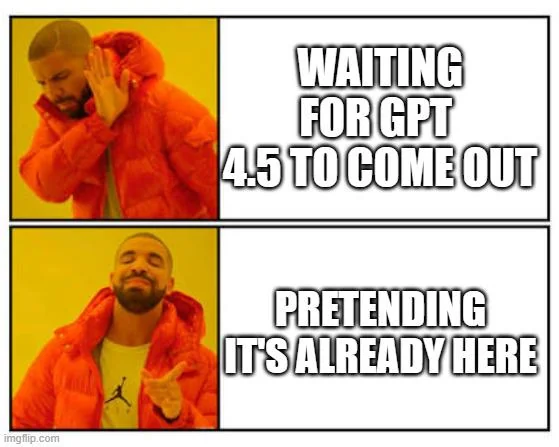

What are we waiting for?

AI enthusiasts have been waiting for big news from OpenAI to be announced before the end of 2023. Will it be GPT-4.5? Will we finally get GPT-5?

Maybe next week. Or maybe not at all, seeing as OpenAI’s board has been given veto rights on any models Sam Altman wants to release. Some are saying that recent improvements on ChatGPT Plus hint at GPT-4.5 already being in action.

Source: imgflip.com

For now, we have to settle for the first results from OpenAI’s Superalignment project. Ilya Sutskever’s team is trying to work out if humans will be able to train future superintelligent AIs to make sure they don’t go rogue. They found an interesting way to simulate this.

OpenAI expects AGI within the next 10 years. VERSES says its breakthrough could make it happen a lot sooner. The company used a cheeky billboard and an open letter to coax OpenAI to drop what it is doing and join the VERSES project. Weirdly, OpenAI may have to comply if they stick to their word.

OpenAI formed a more dubious partnership with media publisher Axel Springer. It aims to bring news content to ChatGPT, but don’t expect unbiased coverage of world events.

If you’re looking for some AI direction in life, TomTom is working with Microsoft and OpenAI to bring ChatGPT to your GPS and other in-car systems.

AI in the wild

While AI companies work hard to make their models safer, Eric Hartford removed all the guard rails from Mistral’s latest model and released it publicly. Dolphin Mixtral is a completely uncensored AI model that complies with any request, even illegal ones. Its desire to save kittens is a big motivator.

Commercial AI models will sometimes offer bad advice by accident, but Dolphin Mixtral does it on porpoise.

In other aquatic mammal AI-related news, conservationists are using AI to identify whales from photos. How the whales feel about the intrusion of facial recognition is anyone’s guess.

AI lab rats

Danish researchers built an AI model that knows when you’re going to die. Life insurance companies will love this. When we see the date that the model spits out we may finally consider making some healthier lifestyle choices.

Researchers from ETH Zurich insist they were working hard and not playing games when they taught a robot to master a labyrinth game with machine learning. We’re guessing the mood was a little lighter in this lab than the Danish one.

Scientists have been using AI to design proteins that display exceptional binding strengths to create new drugs that are better than antibodies.

Materials scientists who were tired of laboring through mountains of X-ray diffraction data used convolutional neural networks to fast-track the discovery of new materials.

When scientists use AI to make discoveries they don’t add the AI model’s name to the list of the paper’s authors. Following suit, a UK court decided that AI can’t be named as an inventor for patents. Will this change once AGI is achieved and the machines achieve some sentience? They might politely insist on getting the credit.

On the campaign trail

It’s election season in a few different countries and AI is showing it can lie better than the most seasoned politician. AI deepfake misinformation could sway how people vote in the Bangladeshi election.

Nearby in Pakistan, former Prime Minister Imran Khan was able to address his supporters even though he was in prison. Using an AI-created avatar of himself, Khan was able to hold a virtual rally. Pakistan’s incumbent leaders weren’t amused.

In the US, a Pennsylvania candidate was the first to use an AI robot called Ashley to call voters. These interactive AI robocalls are cheap and effective, but they raise some tricky ethical questions.

Whoever wins the upcoming US presidential election may have some novel economic policies to figure out. A US regulator has warned that AI poses a growing risk to financial markets.

Making AI think

Models like ChatGPT are impressive, but they don’t really “think” like we do or come up with new ideas.

Google’s DeepMind has made a major breakthrough in changing that with mathematical machine learning. The FunSearch model comes up with new solutions to math problems that have baffled mathematicians for decades.

Western Sydney University is preparing to switch on its DeepSouth supercomputer. DeepSouth can perform synaptic operations at a rate that matches the estimated operational capacity of the human brain. It’s not the biggest supercomputer, but its neuromorphic architecture makes it unique.

Something that didn’t improve this week is the mood of Gemini’s marketing team. Microsoft threw shade at Google’s claim that Gemini is better than GPT-4. Microsoft engineers gave GPT-4 a boost using a new prompting technique called Medprompt. Medprompt sees GPT-4 improve its reasoning and take back the benchmark test crown in a photo finish.

Last week we said GPT-4 was getting a little lazy, but it seems much better now. When it was still in holiday mode, Ethan Mollick had this gem of an interaction with it. Check out the first line in the code it wrote.

ChatGPT-4 suddenly got very good again for some reason, after being unreliable & a little “dull” for weeks

(Though this might be my favorite interaction: I asked it to create files for me, it insisted it could not, I told it to try, it did, and then look at the top code comment) pic.twitter.com/X1WhtxC96e

— Ethan Mollick (@emollick) December 17, 2023

In other news…

Here are some other clickworthy AI stories we enjoyed this week:

Stability AI released Stable Zero123, which generates quality 3D models from a single image.

The Pope has called for a treaty on AI regulation.

Scientific journal Nature added AI at number 11 below its list of the 10 humans who have shaped science in 2023.

Google announced VideoPoet, a large language model for zero-shot text-to-video generation.

ByteDance got busted using OpenAI data to train its models.

And that’s a wrap.

I’m trying to decide whether completely uncensored models like Dolphin Mixtral are a liberating dream come true or a terrifying start to an AI nightmare. It’s out there now so we’ll find out soon enough.

AI misinformation during elections is obviously a bad thing, but could AI make a positive difference in democracies? If we fed ChatGPT all the data on prospective candidates, could it make a better choice in seconds than the one that takes millions of voters days to make?

Could an AGI run for office one day? In case one is reading this, I, for one, welcome our robot overlords.

Let us know what you think and send us links to cool AI stories we may have missed.

The post DAI#18 – Dolphins, doubles, and cheeky AI upstarts appeared first on DailyAI.