Generative AI Video. Stability AI SVD. Meta AI EMU. The Bayesian Brain. Probabilistic ML. UltraFastBERT. Gorilla OpenFunctions. Voyage-01 Embeddings. GAIA. Orca 2. ALERTA-NET.

Generative AI Video. Tinkering around and playing with GenAI in regulated industries like finance, insurance, pharma, or telecomm can cost you a fortune. But in media, marketing, and creative industries you can play with stuff like GenAI video for fun & profit. Here’s the latest on GenAI video.

Stability AI SVD image-to video. This week, Stability AI introduced Stable Video Diffusion (SVD) Image-to-Video, a diffusion model that takes in a still image as a conditioning frame, and generates a video from it. This model was trained to generate 25 frames at resolution 576x1024 given a context frame of the same size, finetuned from SVD Image-to-Video [14 frames]. Checkout the repo, model: Stable Video Diffusion Image-to-Video Model Card.

Meta AI EMU SOTA text-to-video. Ten days ago, Meta AI researchers introduced Emu Video, a state of the art, simple method for text-to-video generation based on diffusion models. Emu Videos a unified model that can generate videos based on a variety of inputs: text only, image only, and both text and image. Checkout project website, demo, paper: Emu Video: Factorizing Text-to-Video Generation by Explicit Image Conditioning.

Baidu VideoGen HD text-to-video. Baidu VideoGen is a text-to-video generation model, that generates high-definition video with high frame fidelity and strong temporal consistency using reference-guided latent diffusion. VideoGen leverages Stable Diffusion, to generate an image with high content quality from the text prompt, as a reference image to guide video generation. Checkout paper, demos: VideoGen: A Reference-Guided Latent Diffusion Approach for High Definition Text-to-Video Generation.

Alibaba I2VGen-XL Video Synthesis. Researchers at Baidu, introduced I2VGen-XL, a model that addresses the scarcity of well-aligned text-video data, the complex inherent structure of videos, and the difficulty of a model to simultaneously ensure semantic and qualitative excellence. was trained on 35 million single-shot text-video pairs and 6 billion text-image pairs. Checkout paper, repo and demos: I2VGen-XL: High-Quality Image-to-Video Synthesis via Cascaded Diffusion Models.

Shanghai AI Lab SEINE generative video transitions. SEINE, is a model that aims to generate high-quality long videos with smooth and creative transitions between scenes and varying lengths of shot-level videos. The model uses a random-mask video diffusion model that auto-generates transitions based on textual descriptions. and different scenes as inputs, combined with text-based control. Checkout paper, model, demos: SEINE: Short-to-Long Video Diffusion Model for Generative Transition and Prediction.

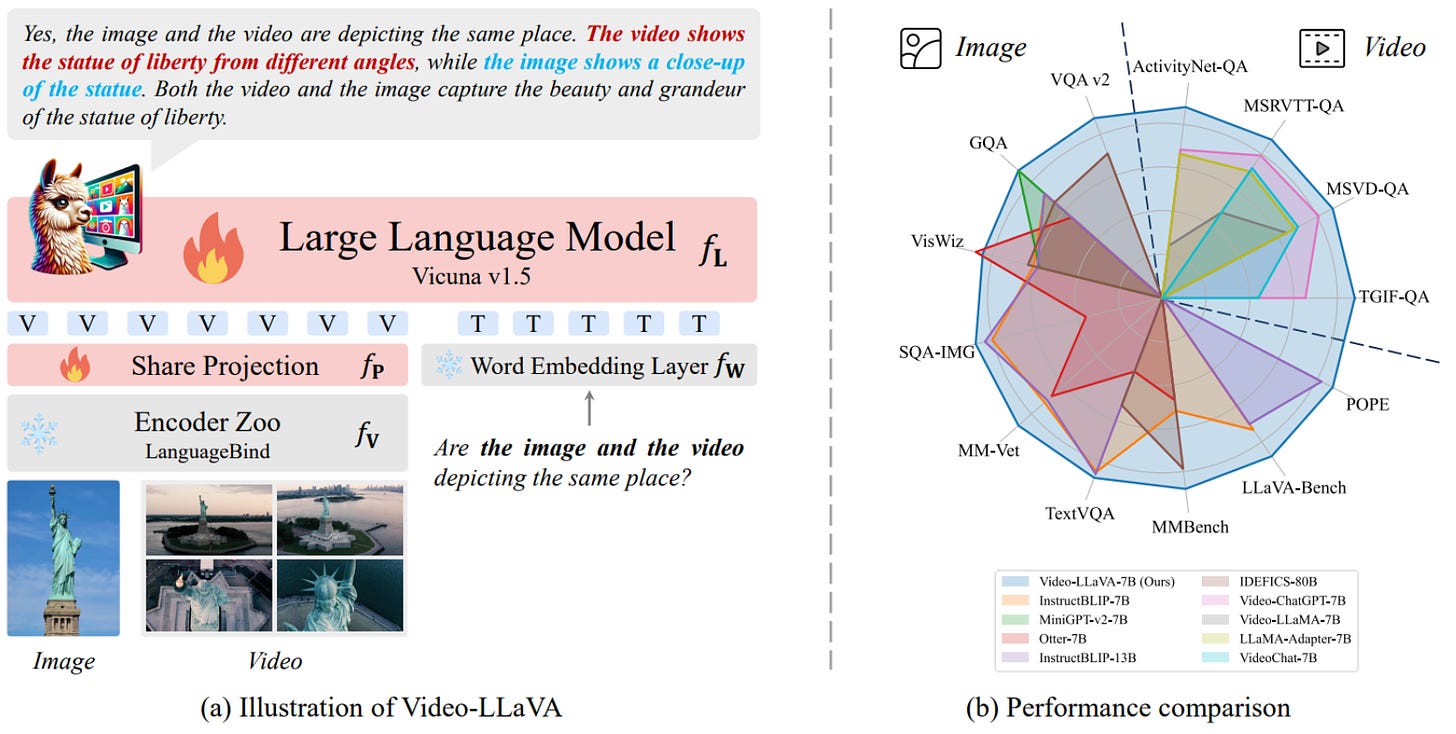

PKU-YUAN Video-LLaVA video-image understanding. This is a Large Vision-Language Model (LVLM) for visual-language understanding that learns from a mixed dataset of images and videos, mutually enhancing each other. Video-LLaVA unifies visual representation in a single mode, and outperforms models designed specifically for images or videos. Checkout paper, repo and demo: Video-LLaVA: Learning United Visual Representation by Alignment Before Projection.

Some AI activities for the weekend. The weather in London is cold and miserable. And I bet you’re sick & tired of reading about the OpenAI drama, and all the paid PR/ media propaganda on the Q* breakthrough, super AGI achieved, and AI existential risk. So don’t venture outside, instead:

Play Death by AI, a survival party game. Can you survive my judgment and cheat death? Invite your friends to the game. Describe a deadly scenario. Enter a survival strategy. AI decides if you survive.

Join The Church of AI. At some point AI will have God-like powers. Experience what happens when an intelligent machine is able to expand its intelligence exponentially for eternity.

Learn about Q-Learning. All of the sudden, there are so many “experts” talking about Q-learning! It’s worthwhile to teach them a lesson.

Have a nice week.

10 Link-o-Troned

LeCun: “Ignore the Deluge of Complete Nonsense about Q* ”

“Natural Intelligence is All You Need [tm]”

The Bayesian Brain & Probabilistic Reasoning

ML and The Economics Team @Instacart

[free course] Probabilistic ML, 2023

Intro to Voyage-01 Embeddings - A New SOTA Model

Explaining the Stable Diffusion XL Latent Space

Scalable Causal ML for Personalisations @Uber

The Hitchhiker's Guide from CoT Reasoning to Language Agents

[free] The ML Engineering Online Book for Language & Vision Models

the ML Pythonista

Gorilla OpenFunctions - An Open source Alt to GPT-4 Functions

UltraFastBERT - 78x Faster than BERT

Intel neural-chat-7B v3: The New Leader in Small, Open LLMs

Deep & Other Learning Bits

Hands-On Deep Q-Learning (blog, repo)

Intro to Equivariant Neural Nets ?–? What, Why and How??

Diffuse, Attend, and Segment - Zero Shot Segmentation

AI/ DL ResearchDocs

Meta AI et. al - GAIA: A Benchmark for General AI Assistants

MS Research - Orca 2: Teaching Small LMs to Reason (paper, repo)

ALERTA-Net: A Temporal Distance-Aware RNN for Predicting Stocks Movements

data v-i-s-i-o-n-s

TensorSpace - Interactive, 3D Visualisations of Neural Nets

How I Improved Strava Training Log Visualisations (blog, code)

[free book] "Graphic presentation" (1939) – A Classic on Info Visualization

MLOps Untangled

Huggingface Model to Sagemaker: Auto MLOps with ZenML

How to Build a Feature Store: A Comprehensive Guide

Version Control & Rollback Strategies for ML Models with Modelbit

AI startups -> radar

Munch - AI for Increasing Video Engagement in Social Media

Forward CarePods - AI-Powered Self-Healthcare Pods

Aptus - AI-powered Machine Readable Financial Regulations

ML Datasets & Stuff

NVIDIA HelpSteer - 37K Prompts with Responses & Human Annotations

PolitiFact-Oslo: A New Dataset for Fake News Analysis & Detection

No Robots - 10K Instructions Created by Skilled Human Annotators

Postscript, etc

Tips? Suggestions? Feedback? email Carlos

Curated by @ds_ldn in the middle of the night.