I recently attended a talk by Kevin Clarke (CS224n) on future trends in NLP. This post summarizes and discusses those trends. Slide snippets are from his guest lecture.

Two primary topics set the trends for NLP with Deep Learning:

1. Pre-Training using Unsupervised/Unlabeled data

2. OpenAI GPT-2 breakthrough

1. Pre-Training using Unsupervised/Unlabeled data

Supervised (labeled) data is expensive and limited. How can we use unlabeled data to supplement training, with supervised fine-tuning on top, to do better?

Let's apply this to machine translation and see how it helps.

Suppose you have two corpora of text (transcriptions or Wikipedia articles) in different languages, with no cross-language mapping between them.

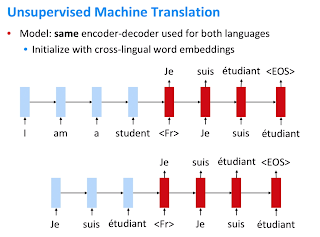

We can use these for pre-training: train an encoder LSTM and a decoder LSTM (without attention) individually, one on each corpus, then fit them together into a single model and fine-tune it on a labeled dataset.

How does this help? Both the encoder and decoder LSTMs have learned their respective language distributions and work well as generative models for their own languages. When you put them together (#2), the model learns to use the compressed representation and map it from the source language to the target language. Pre-training is, in a sense, a "smart" initialization. Let's take this further.
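To make the "smart initialization" idea concrete, here is a minimal sketch of the data flow only. Everything in it is a stand-in: `init_lstm_params`, `pretrain_language_model`, and `build_seq2seq` are hypothetical helpers, the "parameters" are plain lists rather than real LSTM weights, and no actual training happens. The point is just the order of operations: pre-train each side on its own unlabeled corpus, then assemble the translation model from those weights instead of from random ones.

```python
import copy
import random


def init_lstm_params(hidden_size, seed):
    """Return randomly initialized stand-in parameters for one LSTM (hypothetical)."""
    rng = random.Random(seed)
    return {
        "embedding": [rng.uniform(-0.1, 0.1) for _ in range(hidden_size)],
        "recurrent": [rng.uniform(-0.1, 0.1) for _ in range(hidden_size)],
    }


def pretrain_language_model(params, monolingual_corpus):
    """Placeholder for language-model training on unlabeled text.

    A real implementation would update the weights by next-word prediction.
    Here we only record how many sentences were seen, so the flow is visible.
    """
    trained = copy.deepcopy(params)
    trained["pretrained_on"] = len(monolingual_corpus)
    return trained


def build_seq2seq(encoder_params, decoder_params):
    """Assemble a translation model whose two halves start from pre-trained weights."""
    return {
        "encoder": copy.deepcopy(encoder_params),
        "decoder": copy.deepcopy(decoder_params),
    }


# Two unrelated monolingual corpora: no sentence here is a translation of another.
english_corpus = ["the cat sleeps", "i like milk"]
french_corpus = ["le chat dort", "bonjour", "il pleut"]

encoder = pretrain_language_model(init_lstm_params(4, seed=0), english_corpus)
decoder = pretrain_language_model(init_lstm_params(4, seed=1), french_corpus)

# The combined model is what would then be fine-tuned on the small labeled dataset.
model = build_seq2seq(encoder, decoder)
```

Fine-tuning on labeled sentence pairs would follow as a separate step and is not shown here.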

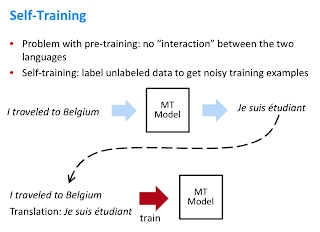

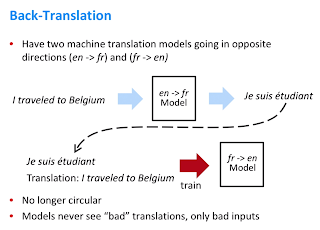

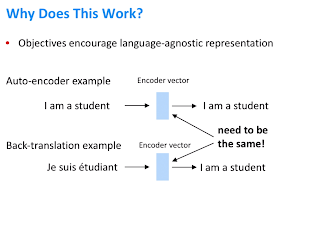

The issue with pre-training is that the network was not originally trained to learn a source-to-target mapping. Let's see how self-training, applied as back-translation, helps here. We use one NMT (Neural Machine Translation) model, NMT1, to translate from English to French, and then feed its output, paired with the original input in reverse order, to a second model, NMT2. That is, given the output of NMT1, NMT2 learns to generate NMT1's input. This acts as "augmented" supervised data: given the noisy output of another model, your network learns to predict that model's input.
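The pairing step in back-translation can be sketched in a few lines. This is an illustration under assumptions, not the setup from the talk: `toy_en_to_fr` is a hypothetical word-by-word lookup standing in for NMT1, and the training of NMT2 on the resulting pairs is left out.

```python
def back_translate(monolingual_english, translate_en_to_fr):
    """Build synthetic (French, English) training pairs for the reverse model."""
    synthetic_pairs = []
    for english_sentence in monolingual_english:
        # NMT1 output: possibly noisy French.
        noisy_french = translate_en_to_fr(english_sentence)
        # NMT2 will be trained to recover the original English from it.
        synthetic_pairs.append((noisy_french, english_sentence))
    return synthetic_pairs


# Hypothetical stand-in for NMT1: a tiny lexicon applied word by word.
TOY_LEXICON = {"the": "le", "cat": "chat", "sleeps": "dort"}


def toy_en_to_fr(sentence):
    """Translate known words and pass unknown words through unchanged (noise)."""
    return " ".join(TOY_LEXICON.get(word, word) for word in sentence.split())


pairs = back_translate(["the cat sleeps", "the dog sleeps"], toy_en_to_fr)
for french, english in pairs:
    print(f"{french!r} -> {english!r}")
```

Note that the second pair contains an untranslated word on the French side. That is the kind of noisy input the paragraph above refers to: the target side (real English) is always clean, even when the source side is not.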

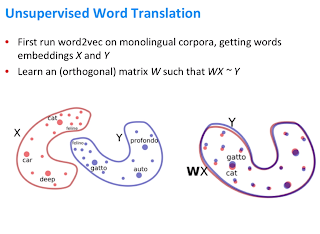

So far so good. Now let's look at a simpler approach that performs NMT with zero labeled data. It starts by learning word vectors in both languages. The good thing about word vectors is that they have inherent structure, which allows us to learn an affine mapping between the two languages, shown as a weight matrix (W) in the image below.

Once mapped, words with similar meanings end up close together in embedding space across languages. Using these embeddings in our previous encoder and decoder, we can train the network to translate between languages, since word meanings are now aligned across both languages.
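As a rough illustration of what the mapping buys us, the sketch below translates single words by applying W and picking the nearest target-language vector. The assumptions are loud ones: the 2-D vectors are made up, W is taken as already learned (here it is simply a 90-degree rotation with no bias term), and how W is obtained without labeled data is not shown.

```python
import math


def apply_mapping(W, vector):
    """Multiply matrix W (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in W]


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def translate_word(word, source_vectors, target_vectors, W):
    """Map a source word into the target space and return its nearest neighbor."""
    mapped = apply_mapping(W, source_vectors[word])
    return max(target_vectors, key=lambda t: cosine(mapped, target_vectors[t]))


# Toy embeddings (made up). The French space is the English one rotated by 90 degrees.
english_vectors = {"cat": [1.0, 0.0], "dog": [0.8, 0.6], "milk": [0.0, 1.0]}
french_vectors = {"chat": [0.0, 1.0], "chien": [-0.6, 0.8], "lait": [-1.0, 0.0]}

# Assumed to be already learned; here it is exactly the rotation between the spaces.
W = [[0.0, -1.0], [1.0, 0.0]]

for word in english_vectors:
    print(word, "->", translate_word(word, english_vectors, french_vectors, W))
```

Because both toy spaces share the same structure (the same relative distances between words), a single matrix is enough to line them up, which is the property of word vectors the approach relies on.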

A summary of the objective functions of the optimization:

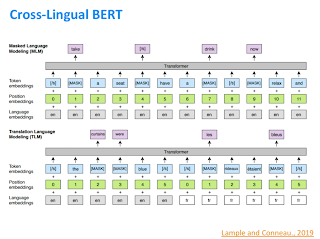

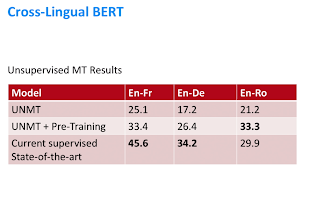

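Recently, the research team at Facebook extended BERT's masked-language-modeling objective: instead of only masking words within a single language, the network is given the same sentence in two languages (parallel, i.e. supervised, data) with words masked in one of them. To fill in the masked words, the model can use the other language, so it learns how meaning corresponds between languages. This gave a significant performance improvement. The approach was presented as Cross-Lingual BERT, and the paper is "Cross-lingual Language Model Pretraining" (Lample and Conneau, 2019).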
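The input construction for this objective, as described above, can be sketched as follows. This is a simplified illustration rather than the paper's preprocessing: the `[MASK]` and `[SEP]` token strings, the `mask_parallel_pair` helper, and the masking probability are all assumptions, and real implementations work on subword tokens.

```python
import random

MASK = "[MASK]"
SEP = "[SEP]"


def mask_parallel_pair(source_tokens, target_tokens, mask_prob=0.3, seed=0):
    """Concatenate a sentence with its translation and mask words in the first one.

    Returns (inputs, labels). labels[i] holds the original token where inputs[i]
    was masked, and None everywhere else (nothing to predict at that position).
    """
    rng = random.Random(seed)
    masked_source = []
    labels = []
    for token in source_tokens:
        if rng.random() < mask_prob:
            masked_source.append(MASK)
            labels.append(token)
        else:
            masked_source.append(token)
            labels.append(None)
    # The translation is left intact so the model can look at it for clues.
    inputs = masked_source + [SEP] + list(target_tokens)
    labels = labels + [None] * (1 + len(target_tokens))
    return inputs, labels


english = ["the", "cat", "sleeps"]
french = ["le", "chat", "dort"]

inputs, labels = mask_parallel_pair(english, french, mask_prob=0.5, seed=0)
print(inputs)
print(labels)
```

When an English word is masked, the unmasked French sentence is the most direct clue for recovering it, which is what pushes the model to align the two languages.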

2. OpenAI GPT-2 breakthrough

Next, let's look at OpenAI's GPT-2 model. It is basically a HUGE model (1.5 billion parameters) trained on quality data (web pages linked from highly rated Reddit posts).

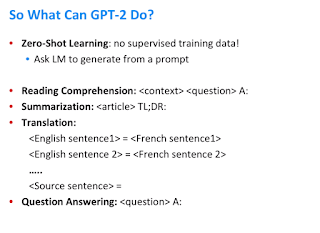

GPT-2 is trained purely as a language model but was evaluated on many other tasks, i.e. it was evaluated as a zero-shot model.

(Zero shot learning means trying to do a task without ever training on it.)

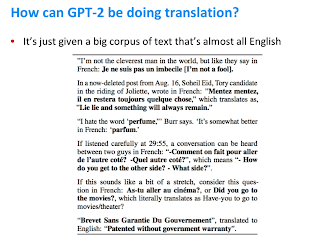

Now the question is: how can GPT-2 perform translation even though it was never explicitly trained to translate? The answer can be seen in snippets of the training corpus: some of the input text contains quotes alongside their translation into another language. From these, the model implicitly learns a mapping between the two languages, which it then uses to perform translation.

Models have continued to grow in size and perform better on vision and language tasks. How long this trend will continue is a question we look forward to answering in the future :)
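In practice, zero-shot translation with a language model comes down to phrasing the task as text to be continued. The sketch below builds such a prompt. The `english = french` line format and the `build_translation_prompt` helper are assumptions made for illustration, and the call to the language model itself is not shown.

```python
def build_translation_prompt(example_pairs, sentence_to_translate):
    """Format translation as plain text that a language model can continue."""
    lines = [f"{english} = {french}" for english, french in example_pairs]
    # The final line is left open, so the most natural continuation is a translation.
    lines.append(f"{sentence_to_translate} =")
    return "\n".join(lines)


prompt = build_translation_prompt(
    [("the cat sleeps", "le chat dort")],
    "i like milk",
)
print(prompt)
```

No weights are updated here. The model only sees text that resembles the translated quotes in its training data and continues the pattern.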