Text generation with GPT-2 is quite easy, given the right tools. Learn how to do it, and how to fine-tune the model on your own dataset.

Natural Language Generation (NLG) is a well-studied subject in the NLP community. With the rise of deep learning methods, NLG has become better and better. Recently, OpenAI pushed the limits with the release of GPT-2, a Transformer-based model that predicts the next token at each time step.

Nowadays it’s quite easy to use these models: you don’t need to implement the code yourself or train the models using expensive resources. HuggingFace, for instance, has released an API that eases access to the pretrained GPT-2 model OpenAI published. Its features include generating text and fine-tuning the model on your own dataset, which shifts the learned distribution so that the model generates text from a new domain.

Doing all of this is easy: it’s only a matter of pip installing the relevant packages and launching a Python script. However, to save yourself the trouble, you could use one of the available platforms such as Spell: you just specify what you want to run, and Spell takes care of the rest (downloading the code, installing the packages, allocating compute resources, managing results).

While I’m not a Spell advocate (I haven’t tried the platform’s other features, or any other platform at all), I decided to write a tutorial that details the process I’ve just described. You can find the tutorial here.

If you also like playing around with machine-generated text, feel free to leave a comment with interesting texts you got. :)

UPDATE: it seems the tutorial is no longer available at the aforementioned link. Although it’s a bit outdated (the HuggingFace API has changed a lot since then), here is the full text:

Natural Language Generation (NLG) is a well-studied subject in the NLP community. One approach to tackling text generation is to factorize the probability of a sequence of tokens (e.g. words or Byte Pair Encoding units) \(P(x_1, \ldots, x_n)\) into the product of the probabilities of each token \(x_1\), …, \(x_n\) conditioned on the tokens preceding it: \(P(x_1, \ldots, x_n) = \prod_{t=1}^{n}P(x_t \mid x_{<t})\). Given a training dataset, one could train such a model to maximize the probability of the next token at each time step. Once the model has been trained, you can generate text by sampling from the distribution one token at a time. Easy as pie.
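To make the factorization concrete, here is a minimal pure-Python sketch. It assumes a hypothetical toy bigram model, in which each token is conditioned only on the previous one (a real model such as GPT-2 conditions on the whole prefix \(x_{<t}\)). The sequence probability is computed as a sum of log conditionals, and generation samples one token at a time until an end token appears.

```python
import math
import random

# Hypothetical toy "model": P(next | previous) over a tiny vocabulary.
# A real language model conditions on the whole prefix, not just the last token.
BIGRAM = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.3, "dog": 0.7},
    "cat": {"</s>": 1.0},
    "dog": {"</s>": 1.0},
}


def sequence_log_prob(tokens):
    """log P(x_1..x_n) = sum over t of log P(x_t | x_<t), here approximated by bigrams."""
    log_p = 0.0
    prev = "<s>"
    for tok in tokens:
        log_p += math.log(BIGRAM[prev][tok])
        prev = tok
    return log_p


def sample(rng, max_len=10):
    """Generate text by repeatedly sampling the next token from P(x_t | x_<t)."""
    out, prev = [], "<s>"
    for _ in range(max_len):
        dist = BIGRAM[prev]
        tok = rng.choices(list(dist), weights=list(dist.values()))[0]
        if tok == "</s>":
            break
        out.append(tok)
        prev = tok
    return out


rng = random.Random(0)
print(sample(rng))
print(math.exp(sequence_log_prob(["the", "cat", "</s>"])))  # ≈ 0.3 (= 0.6 * 0.5 * 1.0)
```

Working in log space turns the product into a sum, which avoids numerical underflow once sequences get long.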

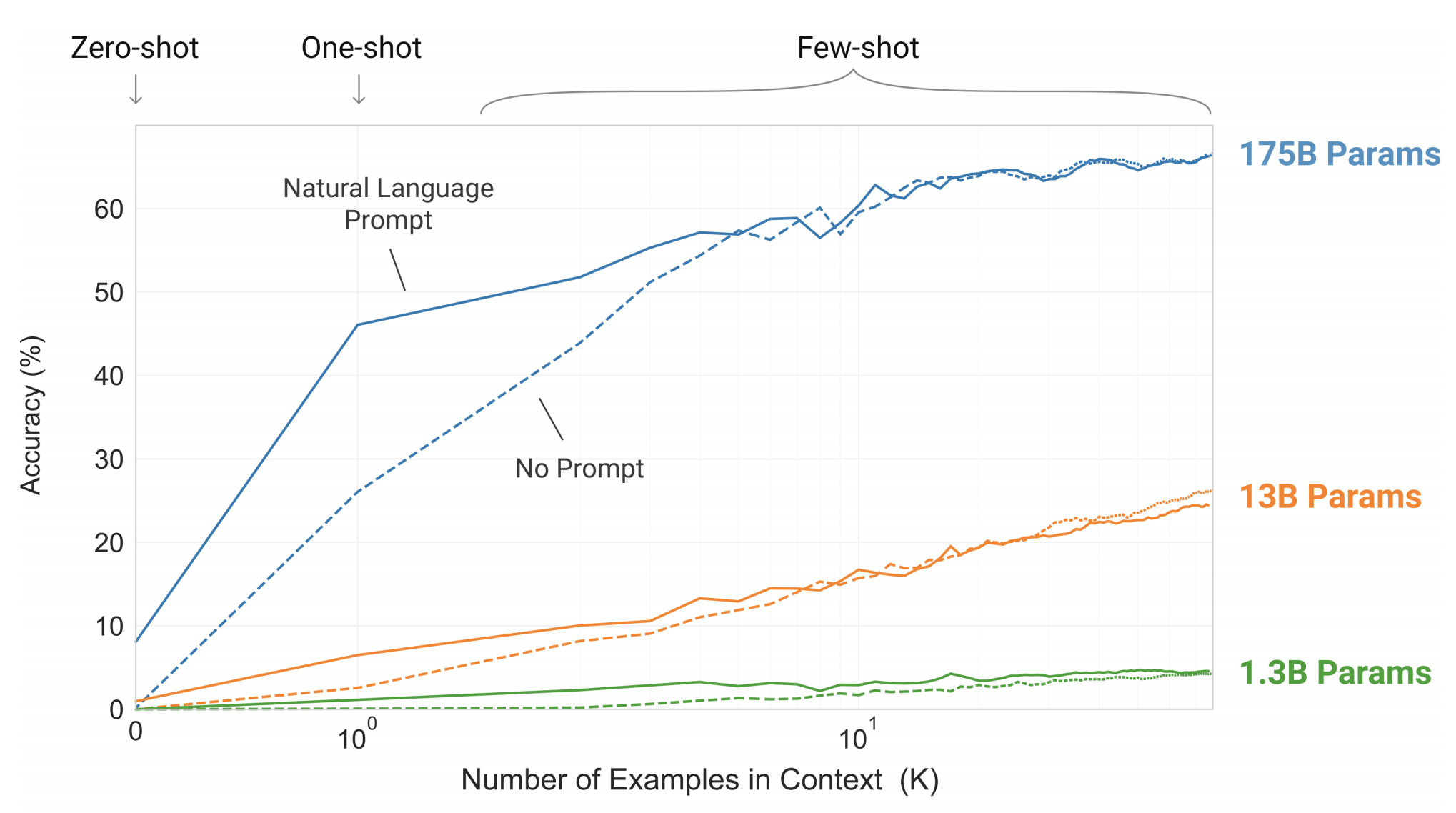

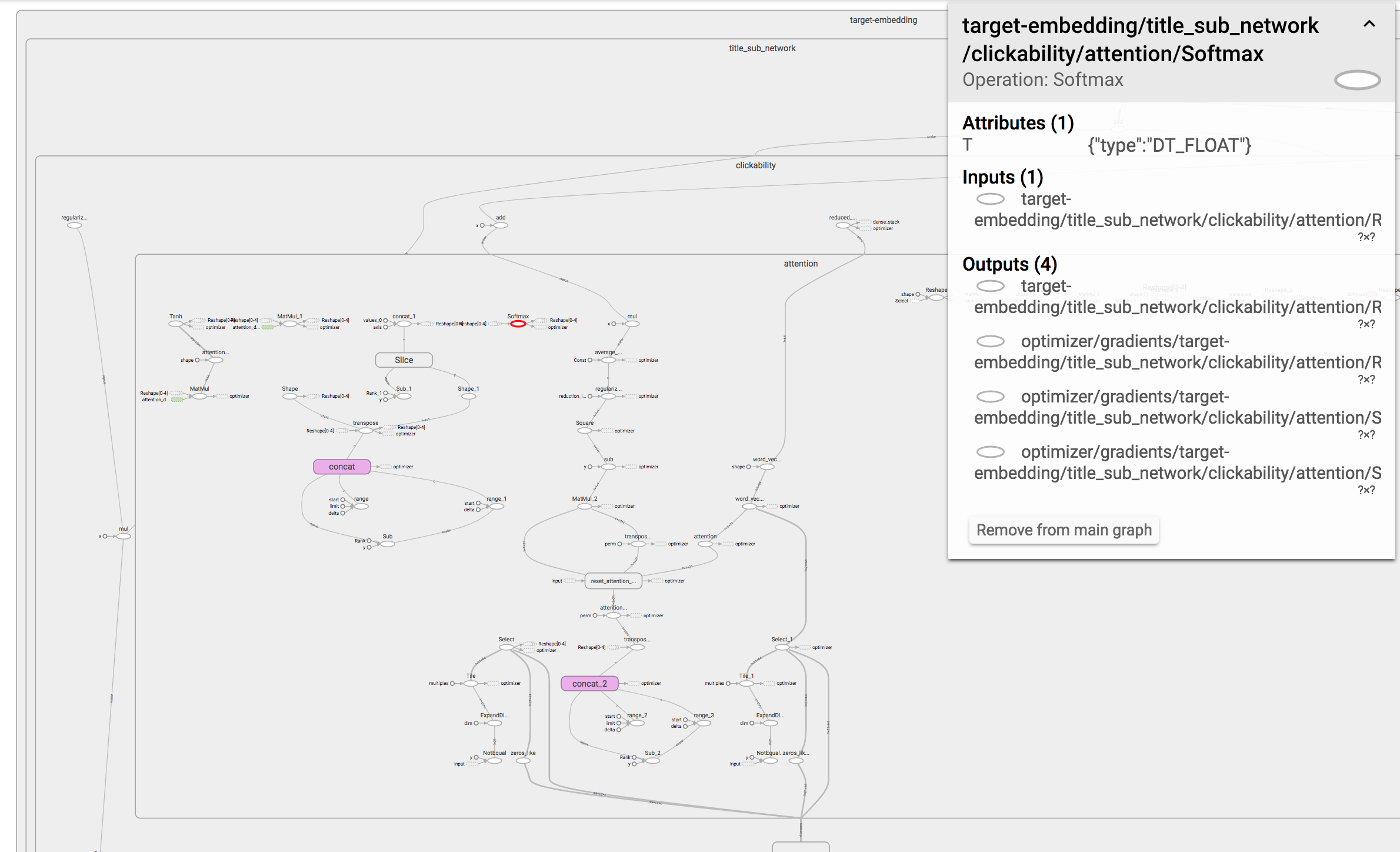

With the rise of deep learning methods, NLG has become better and better. Recently, OpenAI pushed the limits with the release of GPT-2. This model uses the well-known Transformer architecture: to calculate the distribution over the next token, the model attends to all of the previous tokens at once through a self-attention mechanism.
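The "previous tokens only" rule is enforced by a causal mask inside self-attention. Below is a minimal single-head sketch in pure Python. It skips the learned query/key/value projections and the multiple heads that GPT-2 actually uses, and takes tiny hand-picked vectors as input, so treat it as an illustration of the masking idea only: each position takes a softmax-weighted average of the value vectors at positions up to and including itself, with scores scaled by \(1/\sqrt{d}\).

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Position t only attends to positions 0..t, so its output never depends
    on later tokens. Real models compute queries, keys and values with
    learned linear projections; this sketch takes them as given.
    """
    d = len(queries[0])
    outputs = []
    for t, q in enumerate(queries):
        # Scores against positions 0..t only: the mask drops positions > t.
        scores = [sum(qi * ki for qi, ki in zip(q, keys[s])) / math.sqrt(d)
                  for s in range(t + 1)]
        weights = softmax(scores)
        out = [sum(w * values[s][j] for s, w in enumerate(weights))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Three toy token vectors, used as queries, keys and values alike.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in causal_self_attention(x, x, x):
    print([round(v, 3) for v in row])
```

Because output \(t\) never depends on tokens after \(t\), the model can compute next-token predictions for every position of a training sequence in a single parallel pass.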

Recently, HuggingFace released an API that eases access to GPT-2. One of its features is generating text using the pre-trained model:
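At each step, generation code of this kind typically picks the next token by sampling from the model’s output distribution, often after reshaping it. Here is a minimal pure-Python sketch of one common recipe, temperature scaling plus top-k filtering. The logits and vocabulary are made up, and this is an illustration of the technique, not HuggingFace’s actual implementation.

```python
import math
import random


def sample_top_k(logits, k, temperature=1.0, rng=random):
    """Sample an index from `logits` after temperature scaling and top-k filtering.

    Lower temperature sharpens the distribution; k restricts sampling to the
    k highest-scoring candidates (k=1 is greedy decoding).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    top = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:k]
    m = max(scaled[i] for i in top)
    weights = [math.exp(scaled[i] - m) for i in top]  # unnormalized softmax over the top k
    return rng.choices(top, weights=weights)[0]


# Hypothetical next-token logits over a 5-token vocabulary.
vocab = ["cat", "dog", "joke", "banana", "the"]
logits = [2.0, 1.5, 3.0, -1.0, 0.5]
rng = random.Random(42)
print(vocab[sample_top_k(logits, k=1, rng=rng)])  # greedy: joke
print([vocab[sample_top_k(logits, k=3, temperature=0.7, rng=rng)] for _ in range(5)])
```

Top-k keeps the model from occasionally picking very unlikely tokens, while temperature trades diversity for coherence.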

That was easy! OpenAI used diverse data found on the web to train the model, so the generated text can be pretty much any natural-looking text. But what if, instead of diversity, we’d like to generate a specific kind of text? Let’s try to generate jokes! To do so, we’ll have to train the model on a dataset of jokes. Unfortunately, getting a big enough dataset would be ridiculously hard! To train GPT-2, which has 124M weights to be learned (and this is merely the smallest version of the architecture), we need a huge amount of data! But how are we going to get that many jokes? The short answer is: we won’t.
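Where does the 124M figure come from? Here is a quick back-of-the-envelope count, assuming the published GPT-2 small configuration (a 50,257-token vocabulary, 1,024 positions, 12 layers, hidden size 768, an MLP 4× wider than the hidden size) and an output layer that shares its weights with the token embedding, as GPT-2’s does.

```python
def gpt2_param_count(vocab=50257, n_ctx=1024, n_layer=12, d=768):
    """Count GPT-2 parameters from its configuration.

    Assumes GPT-2's layout: token + position embeddings, n_layer identical
    blocks, a final LayerNorm, and an LM head tied to the token embedding
    (so the head adds no extra parameters).
    """
    embeddings = vocab * d + n_ctx * d
    layer_norm = 2 * d                               # scale and bias
    attn = (d * 3 * d + 3 * d) + (d * d + d)         # fused Q/K/V projection + output projection
    mlp = (d * 4 * d + 4 * d) + (4 * d * d + d)      # expand to 4d, then project back to d
    per_layer = 2 * layer_norm + attn + mlp
    return embeddings + n_layer * per_layer + layer_norm  # + final LayerNorm


print(f"{gpt2_param_count():,}")  # 124,439,808
```

Almost a third of those weights (about 38.6M) sit in the token embedding table. Plugging in the medium configuration, `gpt2_param_count(n_layer=24, d=1024)`, gives 354,823,168, roughly 355M.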

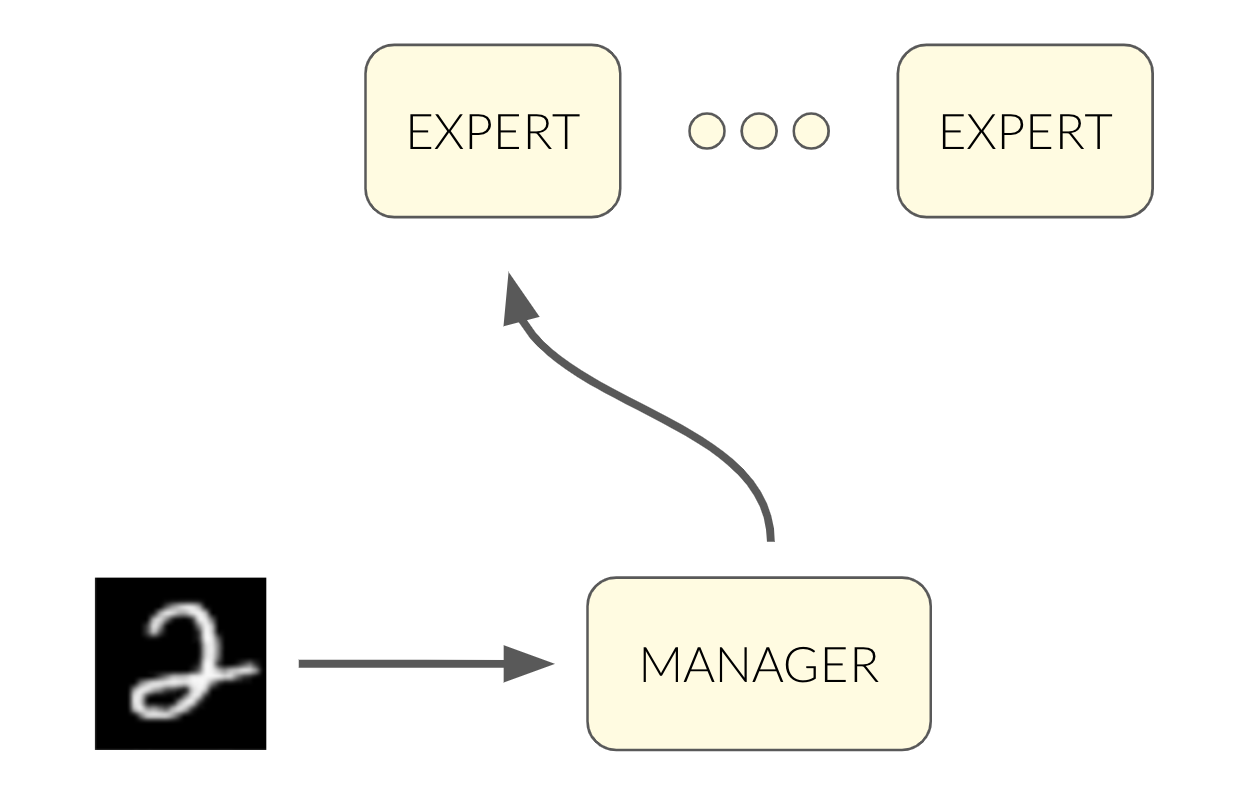

Learning to generate jokes involves learning how to generate natural-looking text, as well as making sure this text is funny. The first part is where most of the learning happens. Using the pre-trained version of GPT-2 as a starting point, the model won’t have to learn how to generate natural-looking text from scratch. All it’ll have to learn is to concentrate the distribution over text that is funny. A relatively small dataset will do for the task.

Don’t get me wrong: the dataset we’ll be using isn’t big enough to learn anything truly useful. Moreover, teaching a model to generalize the concept of humor is a hard problem. However, for the purpose of this post (learning how to use and fine-tune a model such as GPT-2), it will do: we’ll witness how the dataset shifts the model’s distribution towards text that looks, to some extent, like jokes.

We’ll use one-liner jokes from short-jokes-dataset to fine-tune GPT-2. Since one-liners are shorter than the average joke, it’ll be easier for the model to learn their distribution. So, first things first, let’s get the data:
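Before fine-tuning, the jokes need to become a plain training text. Here is a minimal sketch, assuming the dataset is a CSV file with a `Joke` column (adjust to the actual file). The word limit used to pick one-liners, the 3K cap and the choice of separating jokes with GPT-2’s `<|endoftext|>` token are all illustrative assumptions, not necessarily what the original tutorial did.

```python
import csv
import io

EOS = "<|endoftext|>"  # GPT-2's end-of-text token


def jokes_to_training_text(csv_file, max_words=15, limit=3000):
    """Turn a CSV of jokes into one training string for language-model fine-tuning.

    Assumptions (hypothetical, adjust to the real dataset): the CSV has a header
    with a `Joke` column; a "one-liner" has at most `max_words` words; at most
    `limit` examples are kept.
    """
    jokes = []
    for row in csv.DictReader(csv_file):
        joke = " ".join(row["Joke"].split())  # collapse stray whitespace and newlines
        if joke and len(joke.split()) <= max_words:
            jokes.append(joke)
        if len(jokes) >= limit:
            break
    # Mark the end of every joke so the model learns where a joke stops.
    return "\n".join(joke + EOS for joke in jokes)


# Made-up two-row CSV standing in for the real file.
sample_csv = io.StringIO(
    "ID,Joke\n"
    '1,"I used to play piano by ear. Now I use my hands."\n'
    '2,"This joke is far too long to count as a one-liner because it just keeps going and going and going"\n'
)
print(jokes_to_training_text(sample_csv))
# I used to play piano by ear. Now I use my hands.<|endoftext|>
```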

HuggingFace have already provided us with a script to fine-tune GPT-2:
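On the data side, a fine-tuning script like this roughly treats the training file as one long stream of token ids, cuts it into fixed-size blocks, and trains each block with the usual next-token objective. Here is a simplified sketch of that step, assuming the text has already been tokenized (the ids below are made up). When you pass `labels` to HuggingFace’s GPT-2 language-modeling head, the one-position shift happens inside the model; it is spelled out here for clarity.

```python
def make_blocks(token_ids, block_size):
    """Split a long token stream into fixed-size training blocks.

    A trailing remainder shorter than `block_size` is dropped.
    """
    return [token_ids[i:i + block_size]
            for i in range(0, len(token_ids) - block_size + 1, block_size)]


def next_token_pairs(block):
    """Inputs and targets for next-token prediction: target t is input t+1."""
    return block[:-1], block[1:]


# Made-up token ids standing in for a tokenized jokes file.
ids = list(range(100, 111))  # 11 tokens
blocks = make_blocks(ids, block_size=4)
print(blocks)                       # [[100, 101, 102, 103], [104, 105, 106, 107]]
print(next_token_pairs(blocks[0]))  # ([100, 101, 102], [101, 102, 103])
```

Because blocks ignore joke boundaries, a single block may contain the end of one joke and the start of the next; end-of-text separators in the training file help the model learn where jokes stop.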

Note that the data downloaded in the previous run is mounted using the `-m` flag. Even though we’ve used a small dataset (3K examples), running 10 epochs on a CPU took about 44 hours, which shows just how big the model is. This is why you should use a GPU if you want to use a bigger dataset or run many experiments (e.g. tune hyperparameters).
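For a sense of scale, those numbers work out to about 5.3 seconds per example per epoch on a CPU. The quick calculation below also extrapolates to a hypothetical dataset ten times larger, under the rough assumption that training time grows linearly with the number of examples.

```python
def seconds_per_example(hours, examples, epochs):
    """Average wall-clock seconds spent per example per epoch."""
    return hours * 3600 / (examples * epochs)


per_example = seconds_per_example(hours=44, examples=3000, epochs=10)
print(f"{per_example:.2f} s per example per epoch")  # 5.28 s per example per epoch

# Hypothetical: 30K examples, 10 epochs, assuming linear scaling.
hours_for_30k = per_example * 30_000 * 10 / 3600
print(f"~{hours_for_30k:.0f} hours")  # ~440 hours, i.e. more than two weeks
```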

Let’s try to generate a joke, after mounting the result of the previous run:
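A sampled continuation often runs past the end of the first joke, so it helps to trim it. Here is a small hypothetical helper, assuming the fine-tuning data separated jokes with GPT-2’s `<|endoftext|>` token or with newlines; the raw output below is made up.

```python
EOS = "<|endoftext|>"  # GPT-2's end-of-text token


def first_joke(generated):
    """Keep only the first one-liner from a generated continuation."""
    text = generated.split(EOS, 1)[0]  # stop at the first end-of-text token
    text = text.split("\n", 1)[0]      # ...or at the first line break
    return text.strip()


raw = "What do you call a fish with no eyes? A fsh.<|endoftext|>\nMy dog started"
print(first_joke(raw))  # What do you call a fish with no eyes? A fsh.
```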

The model has learned to generate short sentences, which is typical of our dataset. This relatively easy-to-grasp statistic of the data was learned well! As for how funny the model is, well… I’ll leave that for you to judge!
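To check the "short sentences" observation with numbers rather than by eye, you could compare simple length statistics of the training jokes and the model’s samples. Here is a minimal sketch; the example lists are made up, so substitute real training jokes and real generations.

```python
from statistics import mean, median


def length_stats(texts):
    """Mean and median word counts: a quick proxy for 'as short as the training data'."""
    counts = [len(t.split()) for t in texts]
    return mean(counts), median(counts)


# Made-up examples; replace with real training jokes and real model samples.
training = ["I used to play piano by ear. Now I use my hands.", "Time flies like an arrow."]
generated = ["My cat told me a joke about time.", "I like turtles."]
print(length_stats(training))   # (8.5, 8.5)
print(length_stats(generated))  # (5.5, 5.5)
```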