
GoodAI and AI Roadmap Institute

Tokyo, ARAYA headquarters, October 13, 2017

Authors: Marek Rosa, Olga Afanasjeva, Will Millership (GoodAI)

Workshop participants: Olga Afanasjeva (GoodAI), Shahar Avin (CSER), Vlado Bužek (Slovak Academy of Science), Stephen Cave (CFI), Arisa Ema (University of Tokyo), Ayako Fukui (Araya), Danit Gal (Peking University), Nicholas Guttenberg (Araya), Ryota Kanai (Araya), George Musser (Scientific American), Seán Ó hÉigeartaigh (CSER), Marek Rosa (GoodAI), Jaan Tallinn (CSER, FLI), Hiroshi Yamakawa (Dwango AI Laboratory)

Summary

It is important to address the potential pitfalls of a race for transformative AI, where:

- Key stakeholders, including the developers, may ignore or underestimate safety procedures, or agreements, in favor of faster utilization
- The fruits of the technology won’t be shared by the majority of people to benefit humanity, but only by a selected few
- Race dynamics may develop regardless of the motivations of the actors. For example, actors may be aiming to develop a transformative AI as fast as possible to help humanity, to achieve economic dominance, or even to reduce costs of development.

There is already an interest in mitigating potential risks. We are trying to engage more stakeholders and foster cross-disciplinary global discussion.

We held a workshop in Tokyo where we discussed many questions and came up with new ones which will help facilitate further work.

The General AI Challenge Round 2: Race Avoidance will launch on 18 January 2018, to crowdsource mitigation strategies for risks associated with the AI race.

What we can do today:

- Study and better understand the dynamics of the AI race
- Figure out how to incentivize actors to cooperate
- Build stronger trust in the global community by fostering discussions between diverse stakeholders (including individuals, groups, private and public sector actors) and being as transparent as possible in our own roadmaps and motivations
- Avoid fearmongering around both AI and AGI which could lead to overregulation
- Discuss the optimal governance structure for AI development, including the advantages and limitations of various mechanisms such as regulation, self-regulation, and structured incentives
- Call to action: get involved with the development of the next round of the General AI Challenge

Introduction

Research and development in fundamental and applied artificial intelligence is making encouraging progress. Within the research community, there is a growing effort to make progress towards general artificial intelligence (AGI). AI is being recognized as a strategic priority by a range of actors, including representatives of various businesses, private research groups, companies, and governments. This progress may lead to an apparent AI race, where stakeholders compete to be the first to develop and deploy a sufficiently transformative AI [1,2,3,4,5]. Such a system could be either AGI, able to perform a broad set of intellectual tasks while continually improving itself, or sufficiently powerful specialized AIs.

“Business as usual” progress in narrow AI is unlikely to confer transformative advantages. This means that although it is likely that we will see an increase in competitive pressures, which may have negative impacts on cooperation around guiding the impacts of AI, such continued progress is unlikely to spark a “winner takes all” race. It is unclear whether AGI will be achieved in the coming decades, or whether specialised AIs would confer sufficient transformative advantages to precipitate a race of this nature. There seems to be less potential for a race among public actors trying to address current societal challenges. However, even in this domain there is a strong business interest which may in turn lead to race dynamics. Therefore, at present it is prudent not to rule out any of these future possibilities.

The issue has been raised that such a race could create incentives to neglect either safety procedures, or established agreements between key players for the sake of gaining first mover advantage and controlling the technology [1]. Unless we find strong incentives for various parties to cooperate, at least to some degree, there is also a risk that the fruits of transformative AI won’t be shared by the majority of people to benefit humanity, but only by a selected few.
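The incentive problem described above can be illustrated with a toy two-actor game. The sketch below is a hypothetical illustration, not the model from [1]: the payoff numbers, the `penalty` parameter, and all function names are assumptions chosen only to show how rushing can be each actor's best response until an external incentive changes the payoffs.

```python
from itertools import product

ACTIONS = ("safe", "rush")


def payoff(mine, theirs, penalty=0.0):
    """Hypothetical payoff to one actor (illustrative numbers only).

    Rushing while the other actor stays safe pays best (first-mover
    advantage); mutual rushing pays worse than mutual safety.
    `penalty` is an assumed cost imposed on any actor who rushes,
    standing in for an agreement or incentive scheme.
    """
    base = {
        ("safe", "safe"): 3.0,
        ("safe", "rush"): 0.0,
        ("rush", "safe"): 4.0,
        ("rush", "rush"): 1.0,
    }[(mine, theirs)]
    return base - (penalty if mine == "rush" else 0.0)


def best_response(theirs, penalty=0.0):
    """Action that maximizes one actor's payoff given the other's action."""
    return max(ACTIONS, key=lambda mine: payoff(mine, theirs, penalty))


def equilibria(penalty=0.0):
    """Pure-strategy Nash equilibria: each action is a best response to the other."""
    return [
        (a, b)
        for a, b in product(ACTIONS, repeat=2)
        if best_response(b, penalty) == a and best_response(a, penalty) == b
    ]


if __name__ == "__main__":
    print(equilibria())             # no incentive scheme
    print(equilibria(penalty=2.0))  # assumed cost for rushing
```

With these made-up numbers, `equilibria()` returns `[('rush', 'rush')]`, while `equilibria(penalty=2.0)` returns `[('safe', 'safe')]`. The point is only qualitative: without some incentive to cooperate, both actors can end up rushing even though both would be better off staying safe.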

We believe that at the moment people present a greater risk than AI itself, and that fearmongering about AI risks in the media can only damage constructive dialogue.

Workshop and the General AI Challenge

GoodAI and the AI Roadmap Institute organized a workshop in the Araya office in Tokyo, on October 13, 2017, to foster interdisciplinary discussion on how to avoid pitfalls of such an AI race.

Workshops like this are also being used to help prepare the AI Race Avoidance round of the General AI Challenge which will launch on 18 January 2018.

The worldwide General AI Challenge, founded by GoodAI, aims to tackle this difficult problem via citizen science, promote AI safety research beyond the boundaries of the relatively small AI safety community, and encourage an interdisciplinary approach.

Why are we doing this workshop and challenge?

With race dynamics emerging, we believe we are still at a time where key stakeholders can effectively address the potential pitfalls.

- Primary objective: find a solution to problems associated with the AI race
- Secondary objective: develop a better understanding of race dynamics including issues of cooperation and competition, value propagation, value alignment and incentivisation. This knowledge can be used to shape the future of people, our team (or any team), and our partners. We can also learn to better align the value systems of members of our teams and alliances

It’s possible that through this process we won’t find an optimal solution, but a set of proposals that could move us a few steps closer to our goal.

This post follows on from a previous blogpost and workshop Avoiding the Precipice: Race Avoidance in the Development of Artificial General Intelligence [6].

Topics and questions addressed at the workshop

General question: How can we avoid AI research becoming a race between researchers, developers, companies, governments and other stakeholders, where:

- Safety gets neglected or established agreements are defied
- The fruits of the technology are not shared by the majority of people to benefit humanity, but only by a selected few

At the workshop, we focused on:

- Better understanding and mapping the AI race: answering questions (see below) and identifying other relevant questions
- Designing the AI Race Avoidance round of the General AI Challenge (creating a timeline, discussing potential tasks and success criteria, and identifying possible areas of friction)

We are continually updating the list of AI race-related questions (see appendix), which will be addressed further in the General AI Challenge, future workshops and research.

Below are some of the main topics discussed at the workshop.

1) How can we better understand the race?

- Create and understand frameworks for discussing and formalizing AI race questions
- Identify the general principles behind the race. Study meta-patterns from other races in history to help identify areas that will need to be addressed
- Use first-principle thinking to break down the problem into pieces and stimulate creative solutions
- Define clear timelines for discussion and clarify the motivation of actors
- Value propagation is key. Whoever wants to advance, needs to develop robust value propagation strategies
- Resource allocation is also key to maximizing the likelihood of propagating one’s values
- Detailed roadmaps with clear targets and open-ended roadmaps (where progress is not measured by how close the state is to the target) are both valuable tools to understanding the race and attempting to solve issues
- Can simulation games be developed to better understand the race problem? Shahar Avin is in the process of developing a “Superintelligence mod” for the video game Civilization 5, and Frank Lantz of the NYU Game Center came up with a simple game where the user is an AI developing paperclips

2) Is the AI race really a negative thing?

- Competition is natural and we find it in almost all areas of life. It can encourage actors to focus, and it lifts up the best solutions
- The AI race itself could be seen as a useful stimulus
- It is perhaps not desirable to “avoid” the AI race but rather to manage or guide it
- Is compromise and consensus good? If actors over-compromise, the end result could be too diluted to make an impact, and not exactly what anyone wanted
- Unjustified negative escalation in the media around the race could lead to unnecessarily stringent regulations
- As we see race dynamics emerge, the key question is if the future will be aligned with most of humanity’s values. We must acknowledge that defining universal human values is challenging, considering that multiple viewpoints exist on even fundamental values such as human rights and privacy. This is a question that should be addressed before attempting to align AI with a set of values

3) Who are the actors and what are their roles?

- Who is not part of the discussion yet? Who should be?
- The people who will implement AI race mitigation policies and guidelines will be the people working on them right now
- Military and big companies will be involved. Not because we necessarily want them to shape the future, but because they are key stakeholders
- Which existing research and development centers, governments, states, intergovernmental organizations, companies and even unknown players will be the most important?
- What is the role of media in the AI race, how can they help and how can they damage progress?
- Future generations should also be recognized as stakeholders who will be affected by decisions made today
- Regulation can be viewed as an attempt to limit future actors who are more intelligent or more powerful. Therefore, to avoid conflict, it’s important to make sure that any necessary regulations are well thought-through and beneficial for all actors

4) What are the incentives to cooperate on AI?

One of the exercises at the workshop was to analyze:

- What are the motivations of key stakeholders?
- What are the levers they have to promote their goals?
- What could be their incentives to cooperate with other actors?

One of the prerequisites for effective cooperation is a sufficient level of trust:

- How do we define and measure trust?
- How can we develop trust among all stakeholders, inside and outside the AI community?

Predictability is an important factor. Actors who are open about their value system, transparent in their goals and ways of achieving them, and who are consistent in their actions, have better chances of creating functional and lasting alliances.

5) How could the race unfold?

Workshop participants put forward multiple viewpoints on the nature of the AI race and a range of scenarios of how it might unfold.

As an example, below are two possible trajectories of the race to general AI:

- Winner takes all: one dominant actor holds an AGI monopoly and is years ahead of everyone. This is likely to follow a path of transformative AGI (see diagram below).

Example: Similar technology advantages have played an important role in geopolitics in the past. For example, by 1900 Great Britain, with only 40 million people, managed to capitalise on the advantage of technological innovation, creating an empire of about one quarter of the Earth’s land and population [7].

- Co-evolutionary development: many actors at a similar level of R&D racing incrementally towards AGI.

Example: This direction would be similar to the first stage of space exploration, when two actors (the Soviet Union and the United States) were developing and successfully putting to use competing technologies.

Other considerations:

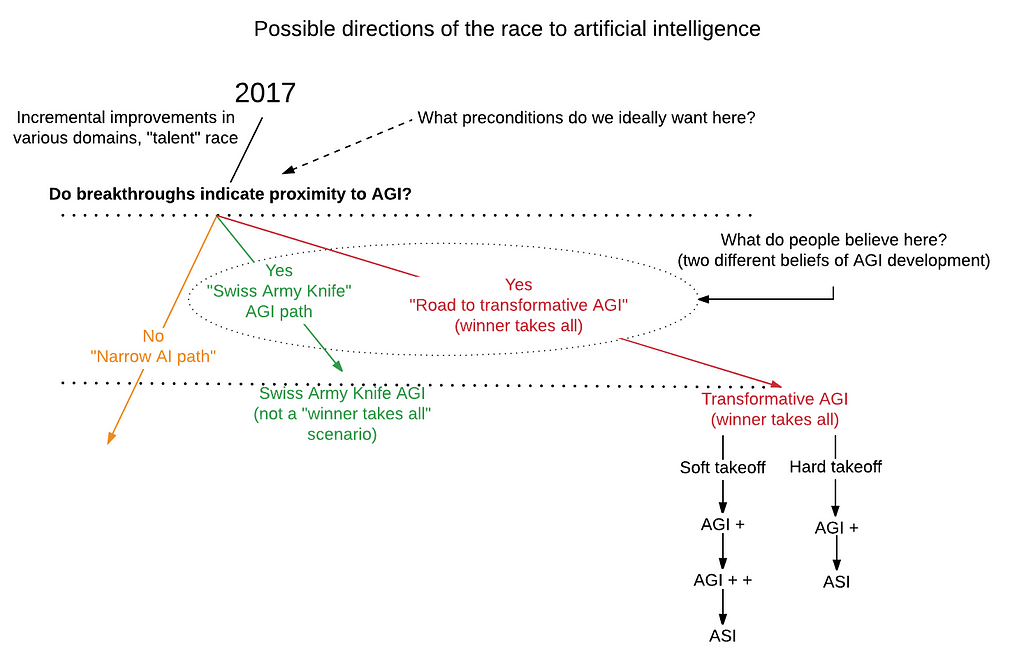

- We could enter a race towards incrementally more capable narrow AI (not a “winner takes all” scenario: grab AI talent)
- We are in multiple races to have incremental leadership on different types of narrow AI. Therefore we need to be aware of different risks accompanying different races
- The dynamics will be changing as different races evolve

The diagram below explores some of the potential pathways from the perspective of how the AI itself might look. It depicts beliefs about three possible directions that the development of AI may progress in. Roadmaps of assumptions of AI development, like this one, can be used to think of what steps we can take today to achieve a beneficial future even under adversarial conditions and different beliefs.

Legend:

- Transformative AGI path: any AGI that will lead to dramatic and swift paradigm shifts in society. This is likely to be a “winner takes all” scenario.
- Swiss Army Knife AGI path: a powerful (can be also decentralized) system made up of individual expert components, a collection of narrow AIs. Such AGI scenario could mean more balance of power in practice (each stakeholder will be controlling their domain of expertise, or components of the “knife”). This is likely to be a co-evolutionary path.
- Narrow AI path: in this path, progress does not indicate proximity to AGI and it is likely to see companies racing to create the most powerful possible narrow AIs for various tasks.

Current race assumption in 2017

Assumption: We are in a race to incrementally more capable narrow AI (not a “winner takes all” scenario: grab AI talent)

- Counter-assumption: We are in a race to “incremental” AGI (not a “winner takes all” scenario)
- Counter-assumption: We are in a race to recursive AGI (winner takes all)
- Counter-assumption: We are in multiple races to have incremental leadership on different types of “narrow” AI

Foreseeable future assumption

Assumption: At some point (possibly 15 years) we will enter a widely-recognised race to a “winner takes all” scenario of recursive AGI

- Counter-assumption: In 15 years, we continue incremental (not a “winner takes all” scenario) race on narrow AI or non-recursive AGI
- Counter-assumption: In 15 years, we enter a limited “winner takes all” race to certain narrow AI or non-recursive AGI capabilities
- Counter-assumption: The overwhelming “winner takes all” is avoided by the total upper limit of available resources that support intelligence

Other assumptions and counter-assumptions of race to AGI

Assumption: Developing AGI will take a large, well-funded, infrastructure-heavy project

- Counter-assumption: A few key insights will be critical and they could come from small groups. For example, Google Search was not invented inside a well-known established company but started from scratch and revolutionized the landscape
- Counter-assumption: Small groups can also layer key insights onto existing work of bigger groups

Assumption: AI/AGI will require large datasets and other limiting factors

- Counter-assumption: AGI will be able to learn from real and virtual environments and a small number of examples the same way humans can

Assumption: AGI and its creators will be easily controlled by limitations on money, political leverage and other factors

- Counter-assumption: AGI can be used to generate money on the stock market

Assumption: Recursive improvement will proceed linearly/diminishing returns (e.g. learning to learn by gradient descent by gradient descent)

- Counter-assumption: At a certain point in generality and cognitive capability, recursive self-improvement may begin to improve more quickly than linearly, precipitating an “intelligence explosion”

Assumption: Researcher talent will be key limiting factor in AGI development

- Counter-assumption: Government involvement, funding, infrastructure, computational resources and leverage are all also potential limiting factors

Assumption: AGI will be a singular broad-intelligence agent

- Counter-assumption: AGI will be a set of modular components (each limited/narrow) but capable of generality in combination
- Counter-assumption: AGI will be an even wider set of technological capabilities than the above

6) Why search for AI race solution publicly?

- Transparency allows everyone to learn about the topic, nothing is hidden. This leads to more trust
- Inclusion: all people from across different disciplines are encouraged to get involved because it’s relevant to every person alive
- If the race is taking place, we won’t achieve anything by not discussing it, especially if the aim is to ensure a beneficial future for everyone

Fear of an immediate threat is a big motivator to get people to act. However, behavioral psychology tells us that in the long term a more positive approach may work best to motivate stakeholders. Positive public discussion can also help avoid fearmongering in the media.

7) What future do we want?

- Consensus might be hard to find and also might not be practical or desirable
- AI race mitigation is basically an insurance. A way to avoid unhappy futures (this may be easier than maximization of all happy futures)
- Even those who think they will be a winner may end up second, and thus it’s beneficial for them to consider the race dynamics
- In the future it is desirable to avoid the “winner takes all” scenario and make it possible for more than one actor to survive and utilize AI (or in other words, it needs to be okay to come second in the race or not to win at all)
- One way to describe a desired future is where the happiness of each next generation is greater than the happiness of a previous generation

We are aiming to create a better future and make sure AI is used to improve the lives of as many people as possible [8]. However, it is difficult to envisage exactly what this future will look like.

One way of envisioning this could be to use a “veil of ignorance” thought experiment. If all the stakeholders involved in developing transformative AI assume they will not be the first to create it, or that they would not be involved at all, they are likely to create rules and regulations which are beneficial to humanity as a whole, rather than be blinded by their own self interest.

AI Race Avoidance challenge

In the workshop we discussed the next steps for Round 2 of the General AI Challenge.

About the AI Race Avoidance round

- Although this post has used the title AI Race Avoidance, it is likely to change. As discussed above, we are not proposing to avoid the race but rather to guide, manage or mitigate the pitfalls. We will be working on a better title with our partners before the release.
- The round has been postponed until 18 January 2018. The extra time allows more partners, and the public, to get involved in the design of the round to make it as comprehensive as possible.
- The aim of the round is to raise awareness, discuss the topic, get as diverse an idea pool as possible and hopefully to find a solution or a set of solutions.

Submissions

- The round is expected to run for several months, and can be repeated
- Desired outcome: next-steps or essays, proposed solutions or frameworks for analyzing AI race questions
- Submissions could be very open-ended
- Submissions can include meta-solutions, ideas for future rounds, frameworks, convergent or open-ended roadmaps with various levels of detail
- Submissions must have a two-page summary and, if needed, a longer/unlimited submission
- No limit on number of submissions per participant

Judges and evaluation

- We are actively trying to ensure diversity on our judging panel. We believe it is important to have people from different cultures, backgrounds, genders and industries representing a diverse range of ideas and values
- The panel will judge the submissions on how they are maximizing the chances of a positive future for humanity
- Specifications of this round are a work in progress

Next steps

- Prepare for the launch of the AI Race Avoidance round of the General AI Challenge in cooperation with our partners on 18 January 2018
- Continue organizing workshops on AI race topics with participation of various international stakeholders
- Promote cooperation: focus on establishing and strengthening trust among the stakeholders across the globe. Transparency in goals facilitates trust. Just like we would trust an AI system if its decision making is transparent and predictable, the same applies to humans

Call to action

At GoodAI we are open to new ideas about how the AI Race Avoidance round of the General AI Challenge should look. We would love to hear from you if you have any suggestions on how the round should be structured, or if you think we have missed any important questions on our list below.

In the meantime we would be grateful if you could share the news about this upcoming round of the General AI Challenge with anyone you think might be interested.

Appendix

More questions about the AI race

Below is a list of some more of the key questions we expect to see tackled in Round 2: AI Race Avoidance of the General AI Challenge. We have split them into three categories: Incentive to cooperate, What to do today, and Safety and security.

Incentive to cooperate:

- How to incentivise the AI race winner to obey any related previous agreements and/or share the benefits of transformative AI with others?
- What is the incentive to enter and stay in an alliance?
- We understand that cooperation is important in moving forward safely. However, what if other actors do not understand its importance, or refuse to cooperate? How can we guarantee a safe future if there are unknown non-cooperators?
- Looking at the problems across different scales, the pain points are similar even at the level of internal team dynamics. We need to invent robust mechanisms for cooperation between: individual team members, teams, companies, corporations and governments. How do we do this?
- When considering various incentives for safety-focused development, we need to find a robust incentive (or a combination of such) that would push even unknown actors towards beneficial AGI, or at least an AGI that can be controlled. How?

What to do today:

- How to reduce the danger of regulation over-shooting and unreasonable political control?
- What role might states have in the future economy and which strategies are they assuming/can assume today, in terms of their involvement in AI or AGI development?
- With regards to the AI weapons race, is a ban on autonomous weapons a good idea? What if other parties don’t follow the ban?
- If regulation overshoots by creating unacceptable conditions for regulated actors, the actors may decide to ignore the regulation and bear the risk of potential penalties. For example, total prohibition of alcohol or gambling may lead to displacement of the activities to illegal areas, while well designed regulation can actually help reduce the most negative impacts such as developing addiction.
- AI safety research needs to be promoted beyond the boundaries of the small AI safety community and tackled interdisciplinarily. There needs to be active cooperation between safety experts, industry leaders and states to avoid negative scenarios. How?

Safety and security:

- What level of transparency is optimal and how do we demonstrate transparency?
- Impact of openness: how open shall we be in publishing “solutions” to the AI race?
- How do we stop the first developers of AGI becoming a target?
- How can we safeguard against malignant use of AI or AGI?

Related questions

- What is the profile of a developer who can solve general AI?
- Who is a bigger danger: people or AI?
- How would the AI race winner use the newly gained power to dominate existing structures? Will they have a reason to interact with them at all?
- Universal basic income?
- Is there something beyond intelligence? Intelligence 2.0
- End-game: convergence or open-ended?
- What would an AGI creator desire, given the possibility of building an AGI within one month/year?
- Are there any goods or services that an AGI creator would need immediately after building an AGI system?
- What might be the goals of AGI creators?
- What are the possibilities of those that develop AGI first without the world knowing?
- What are the possibilities of those that develop AGI first while engaged in sharing their research/results?
- What would make an AGI creator share their results, despite having the capability of mass destruction (e.g. Internet paralysis)? (The developer’s intentions might not be evil, but their defense against “nationalization” might logically be a show of force.)
- Are we capable of creating such a model of cooperation in which the creator of an AGI would reap the most benefits, while at the same time be protected from others? Does a scenario exist in which a software developer monetarily benefits from free distribution of their software?
- How to prevent usurpation of AGI by governments and armies? (i.e. an attempt at exclusive ownership)

References

[1] Armstrong, S., Bostrom, N., & Shulman, C. (2016). Racing to the precipice: a model of artificial intelligence development. AI & SOCIETY, 31(2), 201–206.

[2] Baum, S. D. (2016). On the promotion of safe and socially beneficial artificial intelligence. AI and Society (2011), 1–9.

[3] Bostrom, N. (2017). Strategic Implications of Openness in AI Development. Global Policy, 8(2), 135–148.

[4] Geist, E. M. (2016). It’s already too late to stop the AI arms race - We must manage it instead. Bulletin of the Atomic Scientists, 72(5), 318–321.

[5] Conn, A. (2017). Can AI Remain Safe as Companies Race to Develop It?

[6] AI Roadmap Institute (2017). AVOIDING THE PRECIPICE: Race Avoidance in the Development of Artificial General Intelligence.

[7] Allen, Greg, and Taniel Chan. Artificial Intelligence and National Security. Report. Harvard Kennedy School, Harvard University. Boston, MA, 2017.

[8] Future of Life Institute. (2017). ASILOMAR AI PRINCIPLES developed in conjunction with the 2017 Asilomar conference.

Other links:

https://www.roadmapinstitute.org/

https://www.general-ai-challenge.org/

Report from the AI Race Avoidance Workshop was originally published in AI Roadmap Institute Blog on Medium.