Earlier this month during Apple’s annual developer conference, WWDC 2022, the company gave developers the first look at improvements coming to Apple’s ARKit 6 toolkit for building AR apps on iOS devices.

Though Apple has yet to reveal (or even confirm) the existence of an AR headset, the clearest indication that the company is absolutely serious about AR is ARKit, the developer toolkit for building AR apps on iOS devices, which Apple has been advancing since 2017.

At WWDC 2022 Apple revealed the latest version, ARKit 6, which is bringing improvements to core capabilities so developers can build better AR apps for iPhones and iPads (and eventually headsets… probably).

Image courtesy Apple


During the ‘Discover ARKit 6’ developer session at WWDC 2022, Apple ARKit Engineer Christian Lipski gave an overview of what’s next.

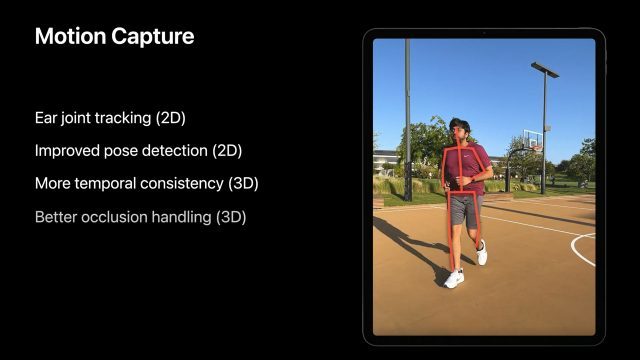

Better Motion Capture

ARKit includes a Motion Capture feature which tracks people in the video frame, giving developers a ‘skeleton’ that estimates the position of the person’s head and limbs. This allows developers to create apps which overlay augmented content onto the person or move it relative to the person (it can also be used for occlusion, placing augmented content behind someone to more realistically embed it into the scene).

In ARKit 6, Lipski says the feature is getting a “whole suite of updates,” including improved tracking of 2D skeletons, which now estimate the location of the subject’s left and right ears (which will surely be useful for face filters, trying on glasses with AR, and similar functions involving the head).

Image courtesy Apple


As for 3D skeletons, which give a pose estimate with depth, Apple is promising better tracking with less jitter, more temporal consistency, and more robustness when the person is partially occluded by the edge of the camera frame or by other objects (though some of these enhancements are only available on iPhone 12 and up).
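On the developer side, the 3D skeleton arrives as an `ARBodyAnchor`. The following is a minimal Swift sketch, assuming a session already running `ARBodyTrackingConfiguration` with this object set as its delegate; the `BodyTracker` class name is hypothetical. It reads the head joint from the skeleton and converts it into world space:

```swift
import ARKit
import simd

/// Hypothetical delegate that logs the tracked person's head position.
@available(iOS 13.0, *)
final class BodyTracker: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let bodyAnchor as ARBodyAnchor in anchors {
            // modelTransform(for:) is expressed relative to the body anchor (root joint).
            guard let headInBody = bodyAnchor.skeleton.modelTransform(for: .head) else { continue }
            // Compose with the anchor's own transform to get a world-space pose.
            let headInWorld = bodyAnchor.transform * headInBody
            let position = headInWorld.columns.3
            print("Head at (\(position.x), \(position.y), \(position.z))")
        }
    }
}
```

To start tracking, an app would check `ARBodyTrackingConfiguration.isSupported` and then call `session.run(ARBodyTrackingConfiguration())`.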

Camera Access Improvements

Image courtesy Apple


ARKit 6 gives developers much more control over the device’s camera while an AR app is using it for tracking.

Developers can now access incoming frames in real time at up to 4K and 30FPS on iPhone 11 and up, as well as the latest iPad Pro (M1). The prior mode, which uses a lower resolution but a higher frame rate (60FPS), is still available. Lipski says developers should carefully consider which mode to use: the 4K mode might be better for apps focused on previewing or recording video (like a virtual production app), while the lower-resolution 60FPS mode might be better for apps that benefit from responsiveness, like games.
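A minimal sketch of opting into the 4K mode, assuming a world-tracking session on iOS 16. `makeWorldTrackingConfiguration(preferFourK:)` is a hypothetical helper; `recommendedVideoFormatFor4KResolution` is the class property ARKit exposes for this, and it returns `nil` on devices that can't stream 4K, in which case the default format stays in place:

```swift
import ARKit

/// Hypothetical helper: builds a world-tracking configuration, opting into
/// 4K video only when requested and supported by the device.
@available(iOS 16.0, *)
func makeWorldTrackingConfiguration(preferFourK: Bool) -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    // nil on unsupported hardware; keep the default (lower-res, higher-FPS) format then.
    if preferFourK,
       let fourKFormat = ARWorldTrackingConfiguration.recommendedVideoFormatFor4KResolution {
        configuration.videoFormat = fourKFormat
    }
    return configuration
}
```

A video-recording app might call `session.run(makeWorldTrackingConfiguration(preferFourK: true))`, while a game would pass `false` to keep the more responsive 60FPS default.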

In addition to higher-resolution video, developers can now capture full-resolution photos even while an AR app is actively using the camera. That means they can pluck out a 12MP image (on an iPhone 13, anyway) to be saved or used elsewhere. This could be great for AR apps where capturing photos is part of the experience. Lipski gives the example of an app that guides users through photographing an object so the images can later be converted into a 3D model with photogrammetry.
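Capturing such a still might look roughly like the following. The two helper functions are hypothetical; the ARKit pieces assumed here are the iOS 16 `recommendedVideoFormatForHighResolutionFrameCapturing` class property and `ARSession.captureHighResolutionFrame(completion:)`:

```swift
import ARKit

/// Hypothetical helper: selects the video format ARKit recommends when the app
/// intends to request high-resolution stills, then starts the session.
@available(iOS 16.0, *)
func runSessionForPhotoCapture(_ session: ARSession) {
    let configuration = ARWorldTrackingConfiguration()
    if let captureFormat = ARWorldTrackingConfiguration.recommendedVideoFormatForHighResolutionFrameCapturing {
        configuration.videoFormat = captureFormat
    }
    session.run(configuration)
}

/// Hypothetical helper: requests one full-resolution frame while tracking continues.
@available(iOS 16.0, *)
func captureStill(from session: ARSession, completion: @escaping (CVPixelBuffer?) -> Void) {
    session.captureHighResolutionFrame { frame, error in
        guard let frame = frame, error == nil else {
            completion(nil)
            return
        }
        // capturedImage holds the high-resolution camera image for this single frame.
        completion(frame.capturedImage)
    }
}
```

In a photogrammetry-style app, `captureStill` would be called each time the user is prompted to take a shot, with the pixel buffers saved for later reconstruction.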

ARKit 6 also lets developers adjust camera settings such as white balance, brightness, and focus as needed while an AR app is running, and read EXIF data from every incoming frame.
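A sketch of both capabilities, assuming iOS 16: `configurableCaptureDeviceForPrimaryCamera` hands back the underlying `AVCaptureDevice` (or `nil`), which is then configured with the usual AVFoundation lock/unlock pattern, and each `ARFrame` carries an `exifData` dictionary. The function names are hypothetical, and reading ISO through ImageIO's EXIF key constant is an assumption about how that dictionary is keyed:

```swift
import ARKit
import AVFoundation
import ImageIO

/// Hypothetical helper: locks focus on the camera ARKit is currently using.
@available(iOS 16.0, *)
func lockFocusOnPrimaryCamera() {
    // nil when the active configuration doesn't expose a configurable device.
    guard let device = ARWorldTrackingConfiguration.configurableCaptureDeviceForPrimaryCamera else { return }
    do {
        try device.lockForConfiguration()
        if device.isFocusModeSupported(.locked) {
            device.focusMode = .locked
        }
        device.unlockForConfiguration()
    } catch {
        print("Could not configure camera: \(error)")
    }
}

/// Hypothetical helper: reads the ISO value from a frame's EXIF metadata
/// (assumes the dictionary uses ImageIO's EXIF key strings).
@available(iOS 16.0, *)
func isoSpeedRatings(of frame: ARFrame) -> Any? {
    frame.exifData[kCGImagePropertyExifISOSpeedRatings as String]
}
```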

More Location Anchor… Locations

Image courtesy Apple


ARKit includes Location Anchors, which can provide street-level tracking for AR in select cities (for instance, for AR turn-by-turn directions). Apple is expanding this functionality to more cities, now including Vancouver, Toronto, and Montreal in Canada; Fukuoka, Hiroshima, Osaka, Kyoto, Nagoya, Yokohama, and Tokyo in Japan; and Singapore.
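Because Location Anchors only work in supported areas, an app would typically check availability before placing content. A minimal sketch, assuming the `ARGeoTrackingConfiguration` and `ARGeoAnchor` APIs that have shipped since iOS 14; the function name is hypothetical:

```swift
import ARKit
import CoreLocation

/// Hypothetical helper: starts geotracking and pins an anchor to a
/// latitude/longitude, but only if geotracking is available there.
@available(iOS 14.0, *)
func startGeoTrackingIfAvailable(at coordinate: CLLocationCoordinate2D, session: ARSession) {
    guard ARGeoTrackingConfiguration.isSupported else { return }
    ARGeoTrackingConfiguration.checkAvailability(at: coordinate) { isAvailable, _ in
        guard isAvailable else { return }
        DispatchQueue.main.async {
            session.run(ARGeoTrackingConfiguration())
            // A location anchor ties virtual content to a real-world coordinate.
            session.add(anchor: ARGeoAnchor(coordinate: coordinate))
        }
    }
}
```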

Later this year the function will further expand to Auckland, New Zealand; Tel Aviv-Yafo, Israel; and Paris, France.

Plane Anchors

Plane Anchors are a tool for tracking flat surfaces like tables, floors, and walls during an AR session. Prior to ARKit 6, the origin of a Plane Anchor would be updated as more of the plane was discovered (for instance, moving the device to reveal more of a table than the camera saw previously). This could make it difficult to keep augmented objects locked in place on a plane if the origin was rotated after they were first placed. With ARKit 6, the origin’s rotation remains static no matter how the shape of the plane might change during the session.
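In code, ARKit 6 reports the plane's current size separately from its fixed origin through `ARPlaneAnchor.planeExtent`. A minimal delegate sketch, assuming iOS 16; `PlaneTracker` is a hypothetical name:

```swift
import ARKit

/// Hypothetical delegate that logs how each tracked plane's extent evolves.
@available(iOS 16.0, *)
final class PlaneTracker: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let planeAnchor as ARPlaneAnchor in anchors {
            let extent = planeAnchor.planeExtent
            // width/height are in meters; rotationOnYAxis (radians) describes how the
            // estimated extent is rotated relative to the anchor's unchanging origin.
            print("Plane \(planeAnchor.identifier): \(extent.width) x \(extent.height) m, " +
                  "rotated \(extent.rotationOnYAxis) rad")
        }
    }
}
```

Content parented to the anchor's transform stays put as the plane grows, since only the extent (not the origin's rotation) changes.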

– – — – –

ARKit 6 will launch with the iOS 16 update, which is available now in beta for developers and is expected to be released to the public this fall.

The post Apple Reveals Improvements Coming in ARKit 6 for Developers appeared first on Road to VR.