Richard Bradshaw explores fears around the use of AI in test automation shared during his session "The Fear Factor" at Future of Testing. The post Should We Fear AI in Test Automation? appeared first on Automated Visual Testing | Applitools.

At the recent Future of Testing: AI in Automation event hosted by Applitools, I ran a session called "The Fear Factor" where we safely and openly discussed some of our fears around the use of AI in test automation. At this event, we heard from many thought leaders and experts in this domain who shared their experiences and visions for the future. AI in test automation is already here, and its presence in test automation tooling will only increase in the very near future, but should we fear it or embrace it?

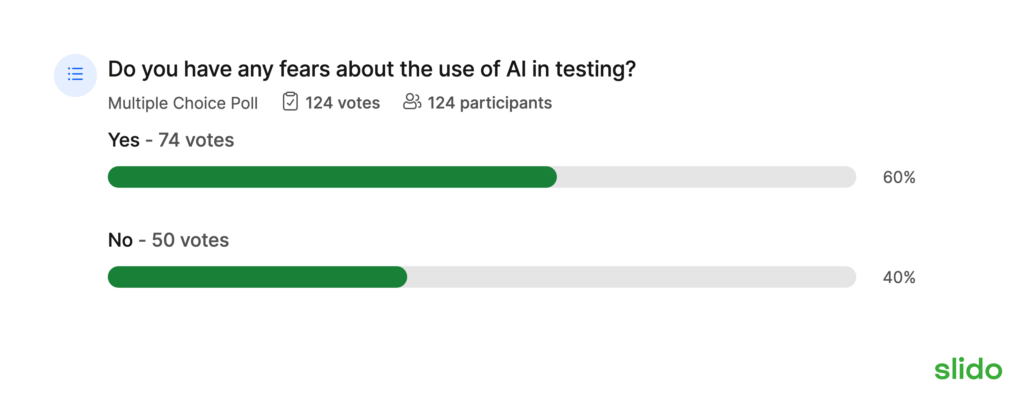

During my session, I asked the attendees three questions:

1. Do you have any fears about the use of AI in testing?
2. In one word, describe your feelings when you think about AI and testing.
3. If you do have fears about the use of AI in testing, describe them.

Do you have any fears about the use of AI in testing?

Where do you sit?

I'm in the Yes camp, and let me try to explain why.

Fear can mean many things, but one of them is the threat of harm. It's this that concerns me in the software testing space. But that harm will only happen if teams or companies believe that AI alone can do a good enough job. If we start to see companies blindly trusting AI tools for all their testing efforts, I believe we'll see many critical issues in production. It's not that I don't believe AI is capable of doing great testing; it's more that many testers struggle to explain their testing, so having good enough data to train such a model feels a long way off to me. Of course, not all testing is equal, and I fully expect to see many AI-based tools picking off some of the low-hanging-fruit testing for us.

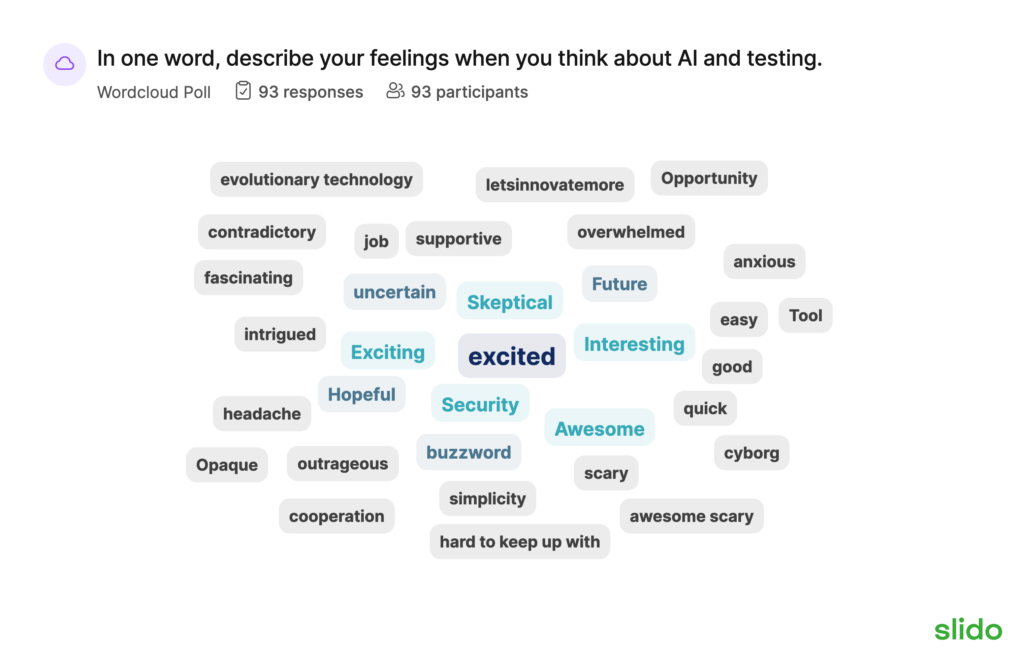

In one word, describe your feelings when you think about AI and testing.

It's hard to disagree with the results from this question. If I were to pick two words myself, I would have gone with "excited" and "skeptical." I'm excited because we seem to be seeing new developments and tools each week. On top of that, we are starting to see AI-based tooling emerge outside of the traditional automation space, and that really pleases me. Combine that with the developments we are seeing in the automation space, such as autonomous testing, and the future of testing tools looks rather exciting.

That said, I'm a tester, so I'm skeptical of most things. I've now seen several testing tools making big promises around the use of AI and, unfortunately, several that talk about replacing testers or needing fewer of them. I'm very skeptical of such claims. If we pause and look across the whole technology industry, the most impactful use of AI thus far has been in assisting people. Various GPTs help generate all sorts of artifacts, such as code, copy, and images. Sometimes the output is good enough on its own, but most of the time it is helping a human be more efficient. This use of AI, and messaging that reflects it, excites me.

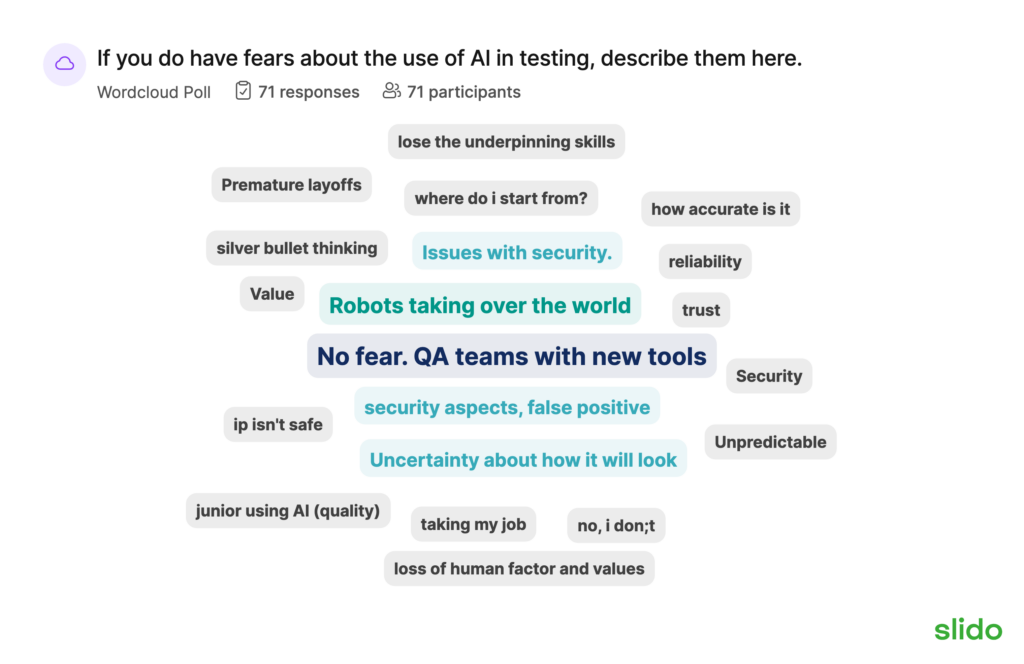

If you do have fears about the use of AI in testing, describe them here.

We got lots of responses to this question, but I'm going to summarise and elaborate on four of them:

1. Job security
2. Learning curve
3. Reliability & security
4. How it looks

Job Security

Several attendees shared that they were concerned about AI replacing their jobs. Personally, I can't see this happening. We had the same concern with test automation, and that never really materialized. Those automated tests don't write themselves, maintain themselves, or share their results themselves. The direction shared by Angie Jones in her talk "Where Is My Flying Car?! Test Automation in the Space Age" and by Tariq King in his talk "Automating Quality: A Vision Beyond AI for Testing" is AI that assists the human, giving them superpowers. That's the future I hope for and believe we'll see: one where we can do our testing far more efficiently because AI is assisting us. Hopefully, this means we can release even more quickly, with higher quality for our customers.

Another concern was that skills we've spent years and a lot of effort learning could suddenly be replaced by AI, or made significantly easier by it. I think this is a valid concern but also inevitable. We've already seen AI bring significant benefits to developers through tools like GitHub Copilot. However, in my fairly extensive experience with Copilot, it only really helps when you know what to ask for, and the same is true of GPTs. Therefore, I think the core skills of a tester will be crucial, and I can't see AI replacing those.

Learning Curve

If we are going to add all these fantastic AI tools to our tool belts, I feel it's going to be important that we all have a basic understanding of AI. Attendees shared this concern too. For me, if I'm going to trust a tool to do testing for me or generate test artefacts for me, I definitely want that basic understanding. That raises the question: where are we going to get this knowledge from?

On the flip side, what if we become over-reliant on these new AI tools? One concern attendees shared was that the next generation of testers might lack some of the core skills we consider important today. Testers are known for being excellent thinkers and practitioners of critical thinking. If AI tools do all this thinking for us, we risk those skills losing focus and no longer being taught. That could leave us over-reliant on such tools, and could also let the tools bias the testing that we do. But given that the community is already focusing on this, I feel it's a risk we can plan for and mitigate.

Reliability & Security

Data, data, data. A lot of fears were shared over the use and collection of data. The majority of us work on applications where data, security, and integrity are critical. I absolutely share this concern. I'm no AI expert, but the best AI tools I've used thus far are ones that are contextual to my domain or application, and to achieve that, we need to train them on our data. This could lead to data bleeding and the exposure of private data, and that is a huge challenge I think the AI space has yet to solve.

One of the huge benefits of AI tooling is that it's always learning and, hopefully, improving. But that brings a new challenge to testing. Usually, when we create an automated test, we codify knowledge and behavior to create something deterministic: we want it to do the same thing over and over again. This provides consistent feedback. However, an AI-based tool won't always do the same thing each time; it will try to apply its intelligence, and that's where the reliability issues come in. What it tested last week may not be what it tests this week, yet it may give us the same indicator. For me, this emphasizes the importance of basic AI knowledge, and of using these tools as assistants to our human skills and judgment.

How It Looks

Several attendees shared concerns about how these AI tools are going to look. Are they going to be a complete black box, where we enter a URL or upload an app and just click Go? Will the tool then tell us pass or fail, or perhaps just go and log the bugs for us? I don't think so. As with Angie's and Tariq's talks I mentioned before, I think it's more likely these tools will focus on assistance.

These tools will be incredibly powerful and capable of doing a lot of testing very quickly. However, what they'll struggle to do is put all the information they find into context. That's why I like the idea of assistance: a bunch of AI robots going off and collecting information for me. It's then up to me to process all that information and put it into the context of the product. The best AI tool will be the one that makes it as easy as possible to process the masses of information these tools are going to return.

Imagine you point an AI bot at your website, and within minutes, it's reporting accessibility issues, performance issues, broken links, broken buttons, layout issues, and much more. It's going to be imperative that we can process that information as quickly as possible, so that these tools continue to support us rather than drown us in information.


In summary, AI is here, and more is coming. These are very exciting times in the software testing tooling space, and I'm really looking forward to playing with more new tools. I think we need to be curious about these new tools, try them, and see what sticks. The more tools we have in our tool belts, the more options we have to solve our increasingly complex testing challenges.
