Tesla must deploy a fix to more than two million cars in the US after a regulator found its Autopilot system doesn't do enough to prevent misuse.

The National Highway Traffic Safety Administration (NHTSA) has determined Tesla’s Autopilot technology is defective.

The US regulator has published a recall notice following a two-year investigation into crashes that occurred while the technology was being used.

Tesla must now fix the issue in 2,031,220 vehicles across the Model 3, Model Y, Model S and Model X lines.

The company is applying an over-the-air (OTA) software update to fix the Autopilot system without owners needing to take their cars to a service centre. However, the NHTSA still considers this action a recall.

It’s unclear if Australian Teslas will receive the same update.

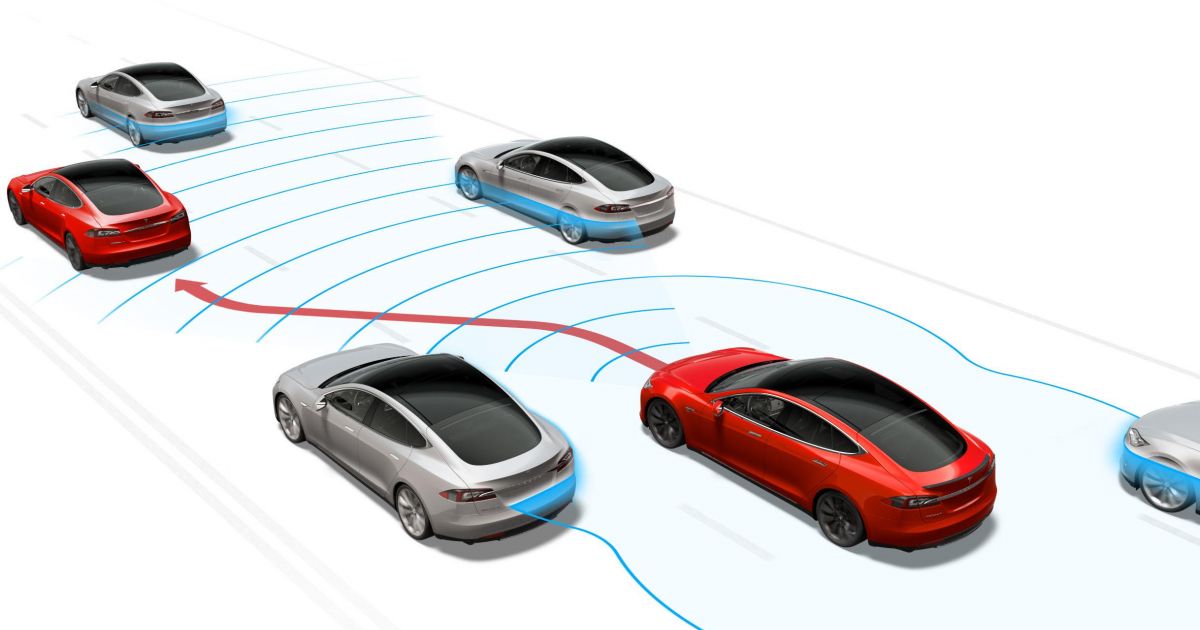

Tesla’s Autopilot is considered a Level 2 driver assistance technology under the levels of autonomy defined by the Society of Automotive Engineers (SAE).

This means Tesla’s Autopilot system can steer, brake, and accelerate by itself, but still requires the driver to keep their hands on or near the steering wheel, and be alert to the current situation.

The driver should intervene if they believe the car is about to do something illegal or dangerous.

To determine if the driver has proper control, Tesla measures torque applied to the vehicle’s steering wheel by the driver.

If the car detects the driver is not holding the wheel for long periods of time, the system will prompt the driver to take over.

Despite this, the NHTSA has found that “the prominence and scope of the feature’s controls may not be sufficient to prevent driver misuse of the SAE Level 2 advanced driver-assistance feature”.

According to the report, Tesla did not concur with the NHTSA’s findings, but agreed to address the agency’s concerns regardless.

The report concludes by detailing the measures Tesla will take to fix its Autopilot system.

“The remedy will incorporate additional controls and alerts to those already existing on affected vehicles to further encourage the driver to adhere to their continuous driving responsibility whenever Autosteer is engaged,” it reads.

It says that, depending on vehicle hardware, additional controls will include more visible alerts on the user interface, simplified engagement of Autosteer, additional checks once this is engaged and being used outside controlled access highways, and an eventual deactivation of Autosteer if the driver isn’t operating the vehicle responsibly.

The recall comes shortly after a former Tesla employee claimed the US electric vehicle (EV) manufacturer’s self-driving technology is unsafe for use on public roads.

As reported by the BBC, Lukasz Krupski said he has concerns about how AI is being used to power Tesla’s Autopilot self-driving technology.

“I don’t think the hardware is ready and the software is ready,” said Mr Krupski.

“It affects all of us because we are essentially experiments in public roads. So even if you don’t have a Tesla, your children still walk in the footpath.”

Mr Krupski claims he found evidence in company data that suggested requirements for the safe operation of cars with a certain level of semi-autonomous driving technology hadn’t been followed.

These developments also come as a US judge ruled there is “reasonable evidence” that Tesla CEO Elon Musk and other managers knew about dangerous defects with the company’s Autopilot system.

A Florida lawsuit was brought against Tesla after a fatal crash in 2019, in which the Autopilot system on a Model 3 failed to detect a truck crossing in front of the car.

Stephen Banner was killed when his Model 3 crashed into an 18-wheeler truck that had turned onto the road ahead of him, shearing the roof off the Tesla.

Despite this, Tesla had two victories in Californian court cases earlier this year.

Micah Lee’s Model 3 was alleged to have suddenly veered off a highway in Los Angeles at 65mph (105km/h) with Autopilot active, striking a palm tree and bursting into flames, all within a few seconds.

Additionally, Tesla won a lawsuit brought by Los Angeles resident Justine Hsu, who claimed her Model S swerved into a kerb with Autopilot active.

In both cases, Tesla was cleared of any wrongdoing.

The NHTSA has opened more than 36 investigations into Tesla crashes, with 23 of these crashes involving fatalities.